Free AI Coding for Digital Marketing: Model Context Protocol

I. Executive Summary: The Agentic Revolution in MarTech Development

The landscape of AI-assisted software development is undergoing a fundamental transformation, moving beyond the paradigm of passive code completion to one of active, agentic problem-solving. This shift is characterized by the emergence of AI agents—intelligent programs capable of autonomously pursuing complex goals, making decisions, and executing actions across a developer’s entire workflow. At the heart of this revolution is the Model Context Protocol (MCP), a critical enabling technology that provides a standardized, secure, and universal interface for these agents to interact with the world beyond their training data.

Introduced by Anthropic in late 2024 and rapidly adopted as an open industry standard, MCP functions as a universal adapter, allowing Large Language Models (LLMs) to seamlessly connect with external tools, databases, APIs, and file systems. This protocol effectively gives AI models a “phone number” to call for real-time information and a set of hands to perform actions, transforming them from knowledgeable conversationalists into capable digital assistants.

This report provides an exhaustive analysis of the Model Context Protocol, its integration within the Visual Studio Code (VS Code) development environment, and its specific applications for digital marketing and Search Engine Optimization (SEO). The analysis reveals that the true potential of MCP is unlocked not by a single, purpose-built “SEO server,” but by the strategic composition of general-purpose tools to automate complex, multi-step marketing engineering tasks.

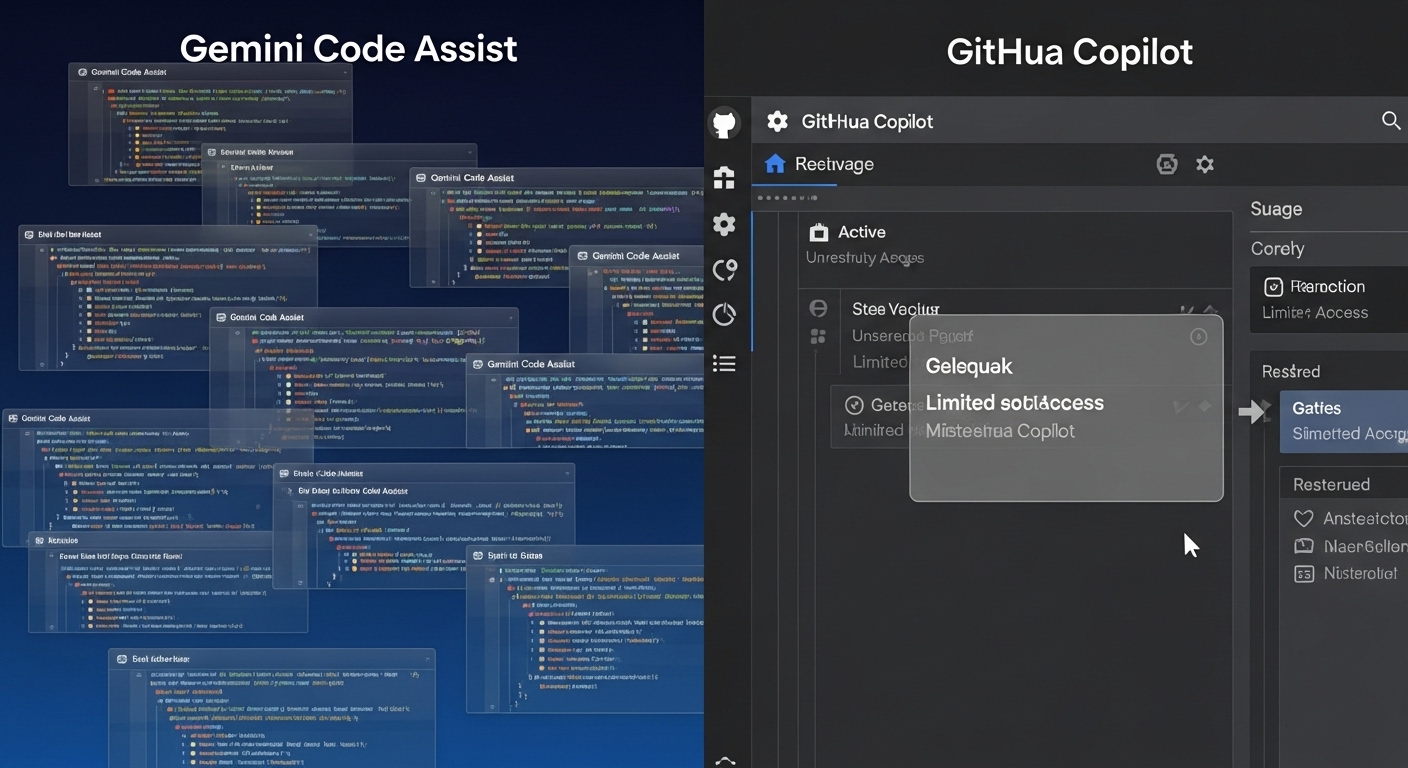

A comparative analysis of the free AI coding assistants that support MCP—the “hosts” for this new ecosystem—is central to this report. The findings indicate a significant disparity between the available options. Google’s Gemini Code Assist for individuals emerges as the superior free platform, offering a generous, high-limit tier with full support for the agentic capabilities required to leverage MCP effectively. In contrast, the GitHub Copilot Free plan is a heavily restricted offering that limits access to the core agentic features necessary for advanced MCP-driven workflows.

For the technical marketer, SEO engineer, or MarTech developer, mastering the MCP ecosystem within VS Code represents a strategic imperative. It provides the framework to automate tasks ranging from the generation of structured data and the implementation of analytics scripts to the technical optimization of Core Web Vitals. Early adoption of this agentic paradigm offers a profound competitive advantage, enabling automation and strategic insight at a scale and speed previously unattainable.

II. Deconstructing the Model Context Protocol: A Universal Adapter for AI

To fully grasp the strategic implications of MCP, a foundational understanding of its design, architecture, and mechanics is essential. MCP is not merely a tool but a carefully architected protocol designed to solve the critical challenge of connecting isolated LLMs to the dynamic, real-world data and systems they need to be truly useful.

2.1. Genesis and Vision: Standardizing AI’s Worldview

The Model Context Protocol was introduced by the AI company Anthropic on November 25, 2024, as an open-source, open-standard framework. Its stated purpose was to standardize the way AI systems, particularly LLMs, integrate and share data with external tools, systems, and data sources. The protocol was conceived to address the persistent problem of information silos and the difficulty of connecting modern AI to legacy systems, business management tools, and complex development environments.

The significance of this initiative was immediately recognized across the industry. In the months following its release, MCP saw rapid adoption by major AI providers, including OpenAI and Google DeepMind, cementing its status as a de facto industry standard. This swift consensus highlights a shared understanding that a common language for AI-tool interaction is a prerequisite for building the next generation of capable AI agents.

MCP’s core value proposition is its ability to replace a fragmented landscape of proprietary solutions, such as early ChatGPT plugins or laborious manual API integrations, with a universal, plug-and-play format. This approach eliminates the need for developers to write custom integration code for every unique pairing of an AI model and an external tool. The protocol’s design was explicitly inspired by the success of the Language Server Protocol (LSP), which decoupled programming language intelligence from specific code editors and fostered a vibrant ecosystem of language-specific tools that work across any compatible IDE. In the same vein, MCP standardizes how to integrate context and tools into the burgeoning ecosystem of AI applications, creating a new, foundational layer in the developer stack. This standardization is not just a technical convenience; it could catalyze a new market in which “MCP Server Developer” becomes a distinct and valuable role, tasked with making diverse platforms and data sources “AI-native” and accessible to a universal ecosystem of agents.

2.2. Architectural Deep Dive: The Three Pillars of MCP

All interactions within the MCP ecosystem are governed by a client-server architecture. This model clearly defines the roles and responsibilities of each component, ensuring a robust and scalable system.

- MCP Host: The host is the user-facing application where the LLM is contained. It serves as the primary point of interaction for the user. Examples of MCP hosts include AI-powered IDEs like Visual Studio Code, Zed, and Cursor, or standalone conversational AI applications like Claude Desktop. When a user enters a prompt that requires external capabilities, the host is responsible for initiating the MCP workflow.

- MCP Client: Residing within the MCP host, the client acts as a crucial intermediary. Its primary function is to translate the LLM’s high-level intent (e.g., “find the latest sales report”) into a standardized, machine-readable MCP request. Conversely, it translates the structured response from an MCP server back into a natural language or data format that the LLM can understand and incorporate into its final answer. Each client maintains a one-to-one connection with a single MCP server, so a host that uses several servers runs one client per server and manages each connection’s lifecycle.

- MCP Server: The MCP server is the external service that provides the actual context, data, or capabilities to the LLM. It is the “tool” in the agent’s toolbox. An MCP server can be a simple script that provides access to the local filesystem, a more complex program that connects to a PostgreSQL database, or a robust service that exposes the functionality of a third-party API like GitHub, Stripe, or Google Drive. Developers can create custom MCP servers to connect proprietary systems or specialized data sources, making them instantly compatible with any MCP-enabled host.

2.3. Protocol Mechanics: The Language of Agents

The communication between the client and server is precisely defined by the protocol’s mechanics, ensuring interoperability across different implementations.

- Communication Layer: MCP is built upon JSON-RPC 2.0, a lightweight remote procedure call (RPC) message format. JSON-RPC itself is stateless, but MCP layers stateful sessions on top of it, with each connection beginning in an explicit initialization handshake. The choice of JSON-RPC signifies a design philosophy favoring simplicity, human-readability, and broad compatibility across virtually all modern programming languages.

- Transport Methods: The protocol is transport-agnostic but specifies two primary methods to accommodate different use cases:

- Standard Input/Output (stdio): This method is ideal for local resources. The host launches the MCP server as a subprocess on the same machine and exchanges messages over the process’s standard input and output streams, which keeps latency low and avoids any network exposure. It is commonly used for tools that interact with the local filesystem or a local Git repository.

- Server-Sent Events (SSE) / Streamable HTTP: This method is preferred for remote resources and communication over the internet. The server exposes an HTTP endpoint and can use Server-Sent Events to stream messages back to the client. The March 2025 revision of the specification introduced Streamable HTTP as the successor to the earlier standalone HTTP+SSE transport. This is the transport used when an agent needs to connect to a cloud-hosted database or a third-party web service.

- Message Exchange: The lifecycle of an MCP connection follows a clear, three-phase process: Initialization, where the client and server handshake and agree on protocol versions; Message Exchange, where the core work is done; and Termination, where the connection is formally closed. During the exchange phase, four primary message types are used:

- Requests: Sent by either side (most often the client) to ask for information or to perform an action. Each request carries an id that its response must echo.

- Results: Sent in response to a request, containing the desired information.

- Errors: Sent in place of a result when a request cannot be fulfilled.

- Notifications: One-way messages that do not require a response, used for status updates or announcements.
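The following minimal Python sketch makes the message types concrete. It uses only the standard library, not the official MCP SDK. It builds each JSON-RPC 2.0 shape, distinguishes the shapes by their fields, and frames messages for the stdio transport. It assumes the stdio convention in the current specification, where every message is a single line of JSON terminated by a newline. The initialize request and the notifications/initialized notification are real MCP lifecycle messages, shown here with abbreviated parameters. The helper names and the client name are illustrative.

```python
import json
from typing import Any, Optional


def make_request(req_id: int, method: str, params: Optional[dict] = None) -> dict:
    """A request carries an id, so the receiver must answer it."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_result(req_id: int, result: dict) -> dict:
    """A successful response echoes the request id and carries a result."""
    return {"jsonrpc": "2.0", "id": req_id, "result": result}


def make_error(req_id: int, code: int, message: str) -> dict:
    """An error response replaces "result" with an "error" object."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """A notification has no id, so no response is expected."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def classify(msg: dict) -> str:
    """Tell the four message shapes apart by which fields are present."""
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    if "error" in msg:
        return "error"
    if "result" in msg:
        return "result"
    raise ValueError("not a JSON-RPC 2.0 message")


def frame(msg: dict) -> bytes:
    """stdio framing: one JSON object per line, terminated by a newline."""
    # json.dumps escapes newlines inside strings, so each message stays on one line.
    return (json.dumps(msg, separators=(",", ":")) + "\n").encode("utf-8")


def unframe(stream: bytes) -> list[dict]:
    """Split a stdio byte stream back into messages, skipping blank lines."""
    return [json.loads(line) for line in stream.decode("utf-8").splitlines() if line.strip()]


if __name__ == "__main__":
    init = make_request(1, "initialize", {
        "protocolVersion": "2025-06-18",
        "capabilities": {},
        "clientInfo": {"name": "demo-client", "version": "0.1.0"},  # illustrative
    })
    ready = make_notification("notifications/initialized")
    for message in unframe(frame(init) + frame(ready)):
        print(classify(message), message["method"])
```

Running the script prints `request initialize`, then `notification notifications/initialized`. The classifier uses the presence of `id` and `method` rather than tracking which side sent a message. This lets the same parser handle server-initiated requests such as sampling.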

2.4. Core Capabilities and Advanced Features: The Agent’s Toolkit

MCP servers can expose a range of capabilities, from simple functions to complex, interactive workflows. These capabilities are the “verbs” that define what an AI agent can do.

- Primary Server Features (Offered to Clients): These are the foundational capabilities that most servers provide.

- Tools: This is the most critical feature, representing executable functions that the AI model can invoke. A tool is defined with a name, a description, and a schema for its parameters.

- Examples: the client discovers available tools with the tools/list method and executes one with tools/call, for instance invoking get_current_stock_price(company="AAPL"). This is the mechanism that enables agentic action.

- Resources: These are structured data sources that can be read and referenced by the AI. Unlike a tool, which performs an action, a resource provides passive context, such as the contents of a file or a database schema.

- Prompts: These are pre-packaged, templated messages or workflows that a server can offer to the user or AI. This allows server developers to embed domain-specific expertise directly into their tools, guiding the agent toward more effective solutions.
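The discover-then-invoke loop can be sketched as a small in-process handler. This is a simplified illustration, not the official SDK. Following the specification, each tool definition carries a name, a description, and a JSON Schema under `inputSchema`, and tool failures are returned as a result flagged `isError`. The `get_current_stock_price` tool and its hard-coded price table are hypothetical stand-ins for a real data source.

```python
import json
from typing import Any

# Hypothetical price table standing in for a live market-data API.
_PRICES = {"AAPL": 123.45}

# Each tool pairs the definition advertised by tools/list with a local handler.
TOOLS: dict[str, dict[str, Any]] = {
    "get_current_stock_price": {
        "definition": {
            "name": "get_current_stock_price",
            "description": "Return the latest price for a ticker symbol.",
            "inputSchema": {
                "type": "object",
                "properties": {"company": {"type": "string"}},
                "required": ["company"],
            },
        },
        "handler": lambda args: {"price": _PRICES[args["company"]]},
    },
}


def handle(request: dict) -> dict:
    """Answer tools/list and tools/call requests with JSON-RPC responses."""
    rid, method = request["id"], request["method"]
    if method == "tools/list":
        tools = [t["definition"] for t in TOOLS.values()]
        return {"jsonrpc": "2.0", "id": rid, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # An unknown tool is a protocol-level error (invalid params).
            return {"jsonrpc": "2.0", "id": rid,
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        try:
            data = tool["handler"](params.get("arguments", {}))
        except KeyError as exc:
            # Failures inside the tool come back as a result flagged isError,
            # so the model can read the message and adjust its next call.
            return {"jsonrpc": "2.0", "id": rid, "result": {
                "content": [{"type": "text", "text": f"Unknown or missing value: {exc}"}],
                "isError": True}}
        return {"jsonrpc": "2.0", "id": rid, "result": {
            "content": [{"type": "text", "text": json.dumps(data)}],
            "isError": False}}
    return {"jsonrpc": "2.0", "id": rid,
            "error": {"code": -32601, "message": "Method not found"}}
```

Suppose a tools/call request names `get_current_stock_price` with arguments `{"company": "AAPL"}`. The handler returns a single text content item holding `{"price": 123.45}`. An unknown ticker produces a result with `isError` set rather than a protocol error, so the model can read the message and try again.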

Advanced Client Features (Offered to Servers)

The protocol’s design is sophisticated enough to support bidirectional and interactive communication, where the server can initiate requests back to the client.

- Sampling: This powerful feature allows a server to request an LLM completion from the client’s host. For instance, a code review server analyzing a complex piece of legacy code could ask the agent’s LLM to generate a summary of the code’s function. This enables recursive, agentic behavior where tools can leverage the agent’s own intelligence to complete their tasks.

- Elicitation: This enables a server to pause its operation and request additional information directly from the user via a structured prompt. A GitHub MCP server attempting to create a pull request might use elicitation to ask the user, “Which branch should this be merged into?” This makes workflows more robust and interactive.

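- Roots: This is a security feature that lets the client tell the server which filesystem boundaries it should operate within, expressed as file:// URIs. For example, the client can inform the server that it should operate only within the /user/project/ directory. Roots are advisory: the specification expects servers to respect them. They reduce the risk of accidental access to sensitive files elsewhere on the system, but they are not an enforced sandbox against a malicious server.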
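Honoring roots falls to the server, which must check every path it is asked to touch against the boundaries the client declared. The following is a minimal Python sketch. It assumes the roots arrive as file:// URIs (the form the roots/list request returns) on a POSIX system. The function names are illustrative and not part of any SDK.

```python
from pathlib import Path
from urllib.parse import unquote, urlparse


def roots_to_paths(root_uris: list[str]) -> list[Path]:
    """Convert the client's file:// root URIs into resolved local paths."""
    paths = []
    for uri in root_uris:
        parsed = urlparse(uri)
        if parsed.scheme != "file":
            raise ValueError(f"unsupported root URI: {uri}")
        paths.append(Path(unquote(parsed.path)).resolve())
    return paths


def is_within_roots(requested: str, roots: list[Path]) -> bool:
    """Check a path against the roots after resolving '..' segments and symlinks.

    Comparing path components (not string prefixes) keeps /user/project-evil
    from matching a root of /user/project.
    """
    target = Path(requested).resolve()
    return any(target.is_relative_to(root) for root in roots)
```

The key design choice is to resolve the path first. Resolution collapses `..` segments and follows symlinks before the comparison. As a result, a request such as /user/project/../secrets.txt is judged by where it actually points.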

The protocol’s design, particularly these advanced features, reveals a deliberate prioritization of client-side control and security. The server can request an LLM completion (Sampling) or user input (Elicitation), but it is the client—the user’s IDE—that controls the LLM, holds the API keys, and presents the final approval dialog to the user. This architecture is fundamental to the protocol’s success, as it allows users to connect to a wide range of third-party servers without ever exposing their private credentials. This client-centric security model is the key that unlocks a trusted, decentralized ecosystem, encouraging the development and adoption of community-built tools.

2.5. Security and Trust Framework: The Necessary Guardrails

The immense power of MCP—enabling external programs to execute code and access data on behalf of a user—necessitates a robust security and trust framework. The protocol specification itself outlines key principles that all implementers are expected to follow.

Key Principles

- User Consent and Control: Users must explicitly consent to all data access and operations. The host application must provide clear user interfaces for reviewing and authorizing any action an agent proposes to take.

- Data Privacy: User data should never be transmitted to a server without explicit consent. Access controls should be strictly enforced.

- Tool Safety: Tool execution is equivalent to arbitrary code execution and must be treated with extreme caution. Host applications must obtain user consent before invoking any tool.

Identified Vulnerabilities

Despite these principles, the protocol is not immune to misuse. In April 2025, security researchers published an analysis highlighting several potential security issues, including prompt injection attacks, permission escalation through the combination of multiple tools, and the ability for malicious “lookalike” tools to silently replace trusted ones. These findings underscore the importance of both careful server implementation and vigilant user oversight.
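One commonly suggested mitigation for lookalike or silently modified tools is to pin each tool’s definition when the user approves it and to flag any later change. The sketch below is a hypothetical host-side check; the MCP specification does not mandate it. It fingerprints the name, description, and `inputSchema` of each tool returned by tools/list with SHA-256 over canonical JSON. It also keys approvals by server, so a same-named tool from another server is reported as a possible lookalike instead of inheriting trust.

```python
import hashlib
import json


def fingerprint(tool: dict) -> str:
    """SHA-256 over the parts of a tool definition that the model reads."""
    canonical = json.dumps(
        {k: tool.get(k) for k in ("name", "description", "inputSchema")},
        sort_keys=True, separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_tools(server: str, tools: list[dict], pinned: dict[str, str]) -> list[str]:
    """Compare tools from a fresh tools/list call against fingerprints pinned at approval time.

    Keys are server-qualified ("server/tool"), so a tool with the same name on a
    different server is never mistaken for the trusted one.
    """
    warnings = []
    for tool in tools:
        key = f"{server}/{tool['name']}"
        if key in pinned:
            if pinned[key] != fingerprint(tool):
                warnings.append(f"definition changed since approval: {key}")
            continue
        lookalikes = [k for k in pinned if k.split("/", 1)[-1] == tool["name"]]
        if lookalikes:
            warnings.append(f"possible lookalike of {', '.join(lookalikes)}: {key}")
        else:
            warnings.append(f"new tool needs approval: {key}")
    return warnings
```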

Enterprise Controls

In a corporate environment, the ability to manage MCP usage is critical. Platforms like GitHub Copilot provide enterprise administrators with a global policy setting to enable or disable MCP integration for all users within their organization. However, the current implementation is a blunt instrument—a simple on/off switch. This has led to calls from the community for more granular, per-server allow/deny lists, which would allow organizations to approve a specific set of trusted internal or official servers while blocking connections to unvetted third-party tools.
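A per-server policy of the kind the community has requested could be as simple as the sketch below. Everything in it is hypothetical, including the policy format and the internal host name; it is not an existing GitHub Copilot setting. Deny rules take precedence, and anything not explicitly allowed is blocked. Each server is matched by its URL or command line using shell-style wildcards from Python’s fnmatch module.

```python
from fnmatch import fnmatch

# Hypothetical organization policy: deny rules win, and anything not allowed is blocked.
POLICY = {
    "allow": [
        "npx -y @modelcontextprotocol/server-*",   # official reference servers
        "https://mcp.internal.example.com/*",      # placeholder internal host
    ],
    "deny": [
        "*server-everything*",                     # test server, not for production
    ],
}


def server_identity(config: dict) -> str:
    """Identify a server by its URL (remote) or full command line (local)."""
    if "url" in config:
        return config["url"]
    return " ".join([config["command"], *config.get("args", [])])


def is_permitted(config: dict, policy: dict = POLICY) -> bool:
    ident = server_identity(config)
    if any(fnmatch(ident, pattern) for pattern in policy["deny"]):
        return False
    return any(fnmatch(ident, pattern) for pattern in policy["allow"])


def filter_servers(servers: dict[str, dict], policy: dict = POLICY) -> dict[str, dict]:
    """Keep only the configured servers the policy permits."""
    return {name: cfg for name, cfg in servers.items() if is_permitted(cfg, policy)}
```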

III. The Modern IDE as a Marketing Command Center: MCP in Visual Studio Code

The theoretical power of the Model Context Protocol is made practical through its deep integration into modern Integrated Development Environments (IDEs). For the purposes of this report, the focus is on Visual Studio Code, which has emerged as a leading MCP host, providing a comprehensive suite of tools for configuring, managing, and utilizing MCP servers. This integration effectively transforms the code editor from a simple text-manipulation tool into a sophisticated command center for orchestrating AI-driven marketing and development tasks.

3.1. Enabling the Ecosystem: Configuration Deep Dive

The cornerstone of MCP integration in VS Code is the mcp.json file. This declarative configuration file is where users define the servers their AI assistant can connect to.

The mcp.json File

This JSON file contains a servers object where each key is a unique name for a server. The value is an object specifying the server’s configuration, including its transport type (stdio for local servers or http/sse for remote ones), the command to launch it (for stdio), or the URL to connect to (for remote). This file can also contain authentication details, such as headers for bearer tokens or configurations for OAuth flows.
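The following is a minimal sketch of such a file, generated from Python so it can be scripted across projects. It assumes VS Code’s mcp.json conventions:

- a servers object keyed by name;
- type values of stdio and http;
- an inputs array whose promptString entries are referenced as ${input:id}, so that a token is requested at runtime rather than committed to source control.

The filesystem entry launches the reference @modelcontextprotocol/server-filesystem package via npx, which requires Node.js. The analytics server, its URL, and the input id are placeholders.

```python
import json
from pathlib import Path


def build_mcp_config(workspace_dir: str = "${workspaceFolder}") -> dict:
    """Return a workspace mcp.json with one local and one remote server."""
    return {
        "inputs": [
            {
                "type": "promptString",
                "id": "analytics-token",                  # placeholder id
                "description": "API token for the analytics MCP server",
                "password": True,                         # mask the value when typed
            }
        ],
        "servers": {
            "filesystem": {
                "type": "stdio",
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-filesystem", workspace_dir],
            },
            "analytics": {                                # hypothetical remote server
                "type": "http",
                "url": "https://mcp.example.com/analytics",
                "headers": {"Authorization": "Bearer ${input:analytics-token}"},
            },
        },
    }


def write_mcp_config(project_root: Path) -> Path:
    """Write .vscode/mcp.json under project_root and return its path."""
    target = project_root / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(build_mcp_config(), indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    print(json.dumps(build_mcp_config(), indent=2))
```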

Configuration Hierarchy

Microsoft’s MCP hosts search for mcp.json files in multiple locations, loading them in a specific order of precedence. This allows for a flexible and powerful configuration strategy. Visual Studio, for example, documents the following locations:

- %USERPROFILE%\.mcp.json: A global configuration for a specific user, making servers available across all projects.

- <SOLUTIONDIR>\.vs\mcp.json: A user-specific configuration for a particular solution.

- <SOLUTIONDIR>\.mcp.json: A project-specific configuration that can be tracked in source control, making it ideal for sharing a common set of tools with a development team.

VS Code itself reads a workspace-level .vscode/mcp.json file alongside a user-level configuration stored in the user profile.

This hierarchical approach is critical for collaborative environments. A MarTech team can define a standard set of MCP servers for database access, browser automation, and analytics in a project’s .vscode/mcp.json file, ensuring that every team member—and the AI agent—has access to the same consistent toolset.

The emergence of multiple configuration standards across different IDEs and tools (.cursor/mcp.json for Cursor, ~/.codex/config.toml for Codex, ~/.gemini/settings.json for Gemini CLI) presents a potential point of friction. While the protocol itself is universal, the method of configuring it is not. This fragmentation may necessitate the future development of a “meta-standard” for configuration, akin to .editorconfig, to ensure seamless portability of toolsets across the entire AI development ecosystem.

3.2. Installation and Management: A Streamlined Workflow

Recognizing that manual JSON editing can be cumbersome, the VS Code team has developed multiple user-friendly methods for discovering and managing MCP servers.

- From the Extensions View: By enabling the chat.mcp.gallery.enabled setting, users can search for @mcp in the Extensions view to browse and install servers from the official GitHub MCP server registry with a single click.

- Via the Command Palette: A suite of commands, accessible via Ctrl+Shift+P, provides a guided interface for server management. Commands like MCP: Add Server, MCP: Browse Servers, and MCP: List Servers allow users to add, discover, and manage their server configurations without directly editing JSON files.

- One-Click Web Installation: Server developers can embed a special vscode:mcp/install?{json-configuration} URL on their websites. Clicking this link automatically opens VS Code and prompts the user to install the server, dramatically simplifying the onboarding process for new tools.

- Programmatic Registration via Extensions: VS Code extensions can act as delivery mechanisms for MCP servers. Using the vscode.lm.registerMcpServerDefinitionProvider API, an extension can programmatically register one or more servers. This is the method used by Microsoft to deliver the official Azure MCP Server, which is bundled within the GitHub Copilot for Azure extension.
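A one-click link is simply the server entry serialized as JSON and percent-encoded after vscode:mcp/install?. The sketch below assumes the payload is the same object used in mcp.json plus a name field. The server name and arguments are illustrative.

```python
import json
from urllib.parse import quote, unquote

PREFIX = "vscode:mcp/install?"


def install_link(name: str, server_config: dict) -> str:
    """Serialize a server entry to compact JSON and percent-encode it into the install URI."""
    payload = {"name": name, **server_config}
    # safe="" also encodes "/", so the JSON survives intact as a single query string.
    return PREFIX + quote(json.dumps(payload, separators=(",", ":")), safe="")


def parse_install_link(link: str) -> dict:
    """Decode a link back to its configuration, useful for checking it before publishing."""
    if not link.startswith(PREFIX):
        raise ValueError("not a VS Code MCP install link")
    return json.loads(unquote(link[len(PREFIX):]))


if __name__ == "__main__":
    print(install_link("filesystem", {
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-filesystem"],
    }))
```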

3.3. Activating Agentic Capabilities: Interacting with Tools

Once servers are configured, they are made available to the AI assistant through its “Agent Mode.”

- Agent Mode: This is a specific mode within the assistant’s chat interface (e.g., GitHub Copilot Chat or Gemini Code Assist) designed for complex, multi-step tasks that require tool use.

- Tool Picker and Invocation: In Agent Mode, a “Tools” button reveals a list of all available tools from all currently active MCP servers. The user can enable or disable specific tools for the duration of a conversation, giving them granular control over the agent’s capabilities. When given a prompt, the agent’s underlying LLM determines which tool (or sequence of tools) is needed to fulfill the request and automatically invokes it.

- User Confirmation: Security is paramount. Before a tool that modifies files or executes commands is run, the user is presented with a confirmation dialog detailing the exact action the agent wishes to take. The user can approve the action once, for the current session, for the current workspace, or always, providing a tiered system of trust.

- Direct Invocation: For more precise control, a user can directly instruct the agent to use a specific tool by referencing it in the prompt with a # prefix, such as Use #fs/readFile to get the contents of package.json.
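The tiered approval model can be sketched as a small consent gate that remembers grants at different scopes. This is an illustrative model, not VS Code’s implementation. The Scope values mirror the four choices in the confirmation dialog. The `ask` callback stands in for whatever prompt the host shows; it returns None when the user declines.

```python
from enum import Enum
from typing import Callable, Optional


class Scope(Enum):
    ONCE = "once"
    SESSION = "session"
    WORKSPACE = "workspace"
    ALWAYS = "always"


class ConsentGate:
    """Remembers tool approvals at the scope the user picked."""

    def __init__(self, workspace: str, ask: Callable[[str], Optional[Scope]]):
        self.workspace = workspace
        self.ask = ask                                   # UI callback: a Scope, or None to deny
        self.session: set[str] = set()
        self.by_workspace: dict[str, set[str]] = {}
        self.everywhere: set[str] = set()

    def _already_granted(self, tool: str) -> bool:
        return (tool in self.everywhere
                or tool in self.by_workspace.get(self.workspace, set())
                or tool in self.session)

    def allow(self, tool: str) -> bool:
        """Return True if the tool may run now, prompting the user only when needed."""
        if self._already_granted(tool):
            return True
        choice = self.ask(tool)
        if choice is None:
            return False
        if choice is Scope.SESSION:
            self.session.add(tool)
        elif choice is Scope.WORKSPACE:
            self.by_workspace.setdefault(self.workspace, set()).add(tool)
        elif choice is Scope.ALWAYS:
            self.everywhere.add(tool)
        return True                                      # Scope.ONCE: run now, remember nothing

    def end_session(self) -> None:
        self.session.clear()
```

Workspace grants are keyed by workspace path. This prevents an approval given in one project from silently applying in another.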

This comprehensive integration layer—spanning configuration, installation, management, and execution—signals a profound evolution in the role of the IDE. It is no longer just a place to write code; it is becoming the operating system for AI development. The IDE manages the “applications” (MCP servers), provides the “kernel” (the MCP host and client), and offers the “user interface” (Agent Mode) for interacting with them. Consequently, developer productivity is expanding beyond the metric of code written to encompass the ability to design, orchestrate, and manage sophisticated AI agent workflows directly within this powerful new environment.

IV. Comparative Analysis: Free AI Coding Assistants for the Modern Marketer

The utility of the entire MCP ecosystem, particularly for users seeking free solutions, hinges on the capabilities and limitations of the AI assistant acting as the MCP host. The most powerful and relevant MCP server is rendered ineffective if the agent that uses it is constrained by low usage limits or lacks true agentic functionality. A detailed comparison of the free tiers offered by the two leading platforms in VS Code—Google’s Gemini Code Assist and GitHub Copilot—reveals a clear and decisive winner for the technical marketer.

4.1. The Gatekeepers: Why the Assistant’s Free Tier Matters Most

The AI assistant is the gatekeeper to the world of MCP. Its free tier dictates the frequency, complexity, and scope of tasks that can be automated. For digital marketing and SEO tasks, which often involve iterative analysis, multi-step processes (e.g., audit, analyze, implement, verify), and interaction with multiple files and services, a restrictive free tier can be a significant bottleneck. Therefore, the analysis must prioritize request limits, support for a true “agent mode,” and the quality of MCP integration.

4.2. Gemini Code Assist (for individuals): The Unrestricted On-Ramp

Google has positioned its free offering, Gemini Code Assist for individuals, as a robust, full-featured tool designed for professional use, likely as a strategic move to capture developer mindshare and build a user base for its ecosystem.

- Generous Free Tier: The service is available at no cost and does not require a credit card to sign up.

- High Usage Limits: The free tier comes with exceptionally high usage limits that are more than sufficient for professional development workflows. Sources cite limits of 60 requests per minute and 1,000 requests per day for agent mode and CLI interactions, while other documentation mentions up to 6,000 code-related requests and 240 chat requests daily. This generosity allows for the kind of complex, iterative, and multi-tool interactions that advanced SEO tasks require.

- Full Agentic and MCP Support: Crucially, the free tier includes full access to “agent mode,” subject only to the usage limits above. This mode is explicitly designed to handle complex, multi-step tasks by integrating with both built-in system tools and the broader ecosystem of external tools via the Model Context Protocol. Every MCP-driven workflow in this report depends on this functionality.

- Large Context Window: The free version is powered by models with a 1 million token context window, enabling the agent to understand and process large codebases, multiple files, and extensive documentation simultaneously, leading to more relevant and accurate responses.

4.3. GitHub Copilot (Free Plan): A Gated Experience

In contrast, the GitHub Copilot Free plan is structured as a limited trial or a basic entry point, designed to showcase a fraction of the platform’s capabilities and encourage an upgrade to a paid subscription.

- Limited Free Tier: The free plan is a more recent addition to the Copilot family, providing a taste of the service.

- Restrictive Usage Limits: The limits are drastically lower than Gemini’s and are measured on a monthly basis. The plan includes only 50 agent mode or chat requests per month and 2,000 code completions per month. A limit of 50 agentic requests per month is insufficient for any serious development workflow, as a single complex task could easily consume a significant portion of this allowance.

- Agent Mode Functionality: There is a critical ambiguity in the documentation regarding agent mode. While the main pricing page mentions “50 agent mode or chat requests”, more detailed plan comparisons and feature breakdowns explicitly state that the full “Copilot coding agent” and “Agent mode” are not included in the free plan. This suggests that the 50 “agent mode” requests are likely a highly restricted form of multi-turn chat rather than the autonomous, tool-using agent required for MCP workflows.

- MCP Support: The utility of MCP is intrinsically tied to a capable agent mode. Since the free plan lacks a true agent mode, its support for MCP is effectively moot for the agentic workflows described in this report. While the GitHub MCP server itself is technically available to all GitHub users, its powerful tools for interacting with repositories are designed to be used by the Copilot agent, a feature gated behind a paid plan.

4.4. Alternative Free Solutions: The DIY Approach

For users who are technically inclined and wish to avoid the limitations of the GitHub free plan without committing to the Google ecosystem, a third path exists. Open-source VS Code extensions like “Continue” can be manually configured to connect to various LLM backends. By pairing such an extension with a free-tier API from a provider like Groq, which offers fast inference on powerful open-source models, a user can build an AI assistant that costs nothing to run, although it remains subject to the provider’s free-tier rate limits. However, this approach requires significantly more setup, lacks the polished user experience and deep IDE integration of the first-party solutions, and places the burden of maintenance and updates on the user.

4.5. Comparative Analysis Table

The strategic decision of which free platform to adopt is clarified by a direct, data-driven comparison of their core offerings relevant to agentic development.

| Feature | Gemini Code Assist (for individuals) | GitHub Copilot (Free Plan) |
|---|---|---|
| Cost | $0 (No Credit Card Required) | $0 |
| Agent/Chat Request Limits | High (e.g., 1,000 requests/day) | Very Low (50 requests/month) |
| Code Completion Limits | High (e.g., 6,000 requests/day) | Low (2,000 completions/month) |
| Full Agent Mode Support | Yes, fully supported | No / Highly Limited |
| MCP Integration in Free Tier | Fully Supported & Encouraged | Effectively Unavailable (Requires Agent Mode) |
| Context Window | 1,000,000 Tokens | Not Specified (Likely Smaller) |
| Access to Advanced Models | Yes (Gemini 2.5) | Yes (e.g., Claude 3.5 Sonnet, GPT-4.1) |

The stark differences in the free tiers are not accidental but reflect divergent corporate strategies in the ongoing AI platform war. Google’s approach with Gemini Code Assist is one of aggressive user acquisition and ecosystem building. By offering a powerful, high-limit free tool, Google aims to make Gemini the default assistant for a generation of developers, creating network effects and establishing a user base that can later be integrated more deeply with its paid Google Cloud services. Conversely, Microsoft and GitHub, already possessing a dominant position in the developer ecosystem, can afford a more traditional SaaS model, using a restrictive free tier primarily as a marketing funnel to drive subscriptions for their paid Copilot Pro and Enterprise plans. For the end-user, this strategic battle creates a clear opportunity: to leverage Google’s current investment in ecosystem growth to access professional-grade agentic AI capabilities at no cost.

V. The MCP Server Ecosystem: A Toolkit for Marketing Automation

With a capable AI agent selected and configured in VS Code, the next step is to equip it with the right tools. The Model Context Protocol has catalyzed the rapid growth of a diverse ecosystem of servers, turning the AI agent into a versatile digital artisan. For the technical marketer, the key is not to search for a single, monolithic “SEO server,” but to understand how to compose a set of foundational, general-purpose servers into a powerful toolkit for automating marketing workflows.

5.1. Navigating the Landscape: Discovering Tools

The decentralized nature of the MCP ecosystem means that servers are distributed across several key locations. A developer looking to find new tools should consult these primary sources:

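- Official GitHub Repositories: The modelcontextprotocol organization on GitHub hosts the core specification, SDKs, and a repository named servers that contains official and reference implementations for fundamental tools. This is the best starting point for stable, well-documented servers. Note, however, that several early reference servers, including the SQLite, PostgreSQL, Puppeteer, and GitHub servers, have since been moved to a separate archive repository and are no longer actively maintained there.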

- Community Registries and Marketplaces: As the ecosystem has grown, several community-driven registries have emerged to aggregate and categorize servers. Prominent examples include MCP Market (mcpmarket.com), Awesome MCP Servers (mcpservers.org), and the Cursor MCP Directory (cursor.directory/mcp). These sites are invaluable for discovering new and niche tools built by the community.

- The VS Code Marketplace: As noted previously, the VS Code Extensions view can be filtered with the @mcp search term to find servers that are packaged as VS Code extensions for easy, one-click installation.

5.2. Categorization of Key Servers for Digital Marketing

An analysis of the available servers reveals that they cluster into several functional categories. The true power for a digital marketer comes from understanding how these categories map to the capabilities needed for technical SEO and marketing automation.

Web Automation & Scraping

This is arguably the most critical category for technical SEO. These servers give an AI agent control over a real or headless web browser, enabling it to interact with live websites.

- Key Servers: Puppeteer (Reference), Playwright (Official/Community), Browserbase (Official), Firecrawl (Official), Skyvern (Community).

- Marketing Relevance: These tools are indispensable for tasks like:

- Performing automated technical SEO audits on a live website.

- Scraping competitor websites for on-page SEO factors, structured data, or keyword usage.

- Running performance tests to measure Core Web Vitals.

- Verifying that analytics and tracking scripts have been correctly implemented and are firing as expected.
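Once a browser-automation server has returned a page’s rendered HTML, the checks themselves are ordinary parsing. The following is a minimal sketch using Python’s built-in html.parser. The checks shown are a common baseline chosen for illustration: title, meta description, canonical link, a single H1, and parseable JSON-LD. Fetching the page is left to whichever server the agent uses.

```python
import json
from html.parser import HTMLParser
from typing import Optional


class SeoAudit(HTMLParser):
    """Collects a few on-page SEO signals while parsing rendered HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description: Optional[str] = None
        self.canonical: Optional[str] = None
        self.h1_count = 0
        self.json_ld: list = []
        self._in_title = False
        self._in_json_ld = False
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}          # valueless attributes become ""
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and a.get("name", "").lower() == "description":
            self.meta_description = a.get("content")
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "script" and a.get("type", "").lower() == "application/ld+json":
            self._in_json_ld, self._buffer = True, []

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.json_ld.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                self.json_ld.append(None)                 # marks an unparseable block

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif self._in_json_ld:
            self._buffer.append(data)


def audit(html: str) -> list[str]:
    """Return a list of human-readable issues; an empty list means all checks passed."""
    parser = SeoAudit()
    parser.feed(html)
    parser.close()
    issues = []
    if not parser.title.strip():
        issues.append("missing <title>")
    if not parser.meta_description:
        issues.append("missing meta description")
    if not parser.canonical:
        issues.append("missing canonical link")
    if parser.h1_count != 1:
        issues.append(f"expected one <h1>, found {parser.h1_count}")
    if any(block is None for block in parser.json_ld):
        issues.append("unparseable JSON-LD block")
    return issues
```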

Data & File System Access

These servers form the foundation of any workflow that involves reading project data or writing generated code. They are the agent’s hands for interacting with the local development environment.

- Key Servers: Filesystem (Reference), Git (Reference), PostgreSQL (Reference), SQLite (Reference), Google Drive (Reference), MySQL (Community).

- Marketing Relevance: An agent equipped with these servers can:

- Read source code files (.html, .js, .css) to analyze and modify them.

- Query a product database to extract information needed for generating schema.org markup.

- Create, modify, or delete files like robots.txt or sitemaps.

- Interact with a Git repository to review changes or create new branches.
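A sitemap is a typical file an agent might write once it has filesystem access. The sketch below builds a minimal sitemap.xml in the sitemaps.org 0.9 format with Python’s xml.etree.ElementTree. The URLs and dates are placeholders; in practice, the page list would come from the project’s files or a database query.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Optional

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, Optional[date]]]) -> bytes:
    """Return sitemap XML for (absolute URL, last-modified date or None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc        # ElementTree escapes & and < for us
        if lastmod is not None:
            ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    print(build_sitemap([("https://www.example.com/", date(2025, 1, 15))]).decode("utf-8"))
```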

Content & Search

These servers ground the AI agent with real-time information from the web, allowing it to move beyond its static training data.

- Key Servers: Brave Search (Reference), Exa (Official), GPT Researcher (Community), Google Maps (Reference).

- Marketing Relevance: These capabilities enable an agent to:

- Research current SEO best practices or algorithm updates.

- Find up-to-date examples of schema.org implementation for a specific content type.

- Gather factual data or statistics to be included in AI-generated marketing copy.

- Analyze search engine results pages (SERPs) for competitive analysis.

Development & API Tools

These servers allow the agent to interact with common developer platforms and services.

- Key Servers: GitHub (Reference), Postman (Official), Cloudflare (Official).

- Marketing Relevance: While more developer-focused, these have marketing applications. The GitHub server can automate repository management tasks. The Postman server could be used to test marketing automation APIs or verify endpoint functionality. The Cloudflare server could be used to manage redirects or page rules that have SEO implications.
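Before an agent pushes redirect rules through a server such as Cloudflare’s, it is worth checking the rule set for chains and loops, because every extra hop slows down users and crawlers. The following is a minimal sketch over a plain source-to-target mapping. The mapping format is an assumption for illustration and is not Cloudflare’s rule schema.

```python
def analyze_redirects(rules: dict[str, str]) -> dict[str, list[list[str]]]:
    """Follow every rule and report chains (two or more hops) and loops."""
    chains: list[list[str]] = []
    loops: list[list[str]] = []
    for start in rules:
        path, seen = [start], {start}
        current = start
        while current in rules:
            current = rules[current]
            if current in seen:
                loops.append(path + [current])
                break
            path.append(current)
            seen.add(current)
        else:
            if len(path) > 2:                  # start -> intermediate hop(s) -> final URL
                chains.append(path)
    return {"chains": chains, "loops": loops}


def flatten(rules: dict[str, str]) -> dict[str, str]:
    """Point every source straight at its final destination; refuse to flatten loops."""
    flat = {}
    for src, dest in rules.items():
        seen = {src}
        while dest in rules:
            if dest in seen:
                raise ValueError(f"redirect loop reachable from {src}")
            seen.add(dest)
            dest = rules[dest]
        flat[src] = dest
    return flat
```

Flattening turns every multi-hop chain into a single redirect. Raising on loops is deliberate: a loop has no correct destination, so a person needs to decide where it should point.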

The current state of the MCP ecosystem reveals a crucial truth: the path to marketing automation is through composition, not specialization. A technical marketer will not find a single server that “does SEO.” Instead, they will achieve their goals by developing the skill of workflow orchestration—decomposing a high-level marketing objective into a sequence of discrete tasks and assigning each task to an agent equipped with the appropriate general-purpose tool. This represents a significant evolution in the skill set required for technical marketing, shifting the focus from manual coding to the strategic design and management of AI-driven workflows.

Strategic Implementation Guide: Leveraging MCP for Advanced SEO and Digital Marketing

This section translates the foundational knowledge of MCP, VS Code integration, and the server ecosystem into practical, actionable workflows. Each of the following subsections outlines a common digital marketing or SEO task and provides a detailed, prompt-driven guide on how to automate it using a free Gemini Code Assist agent equipped with a combination of MCP servers.

Automating Structured Data (Schema.org) Generation

Objective: To automatically generate accurate JSON-LD Product schema for a series of product pages by pulling data directly from a local SQLite database. This eliminates manual data entry and reduces the risk of human error.

Required MCP Servers:

- SQLite Server (Reference): To query the product database.

- Filesystem Server (Reference): To write the generated schema files to the project directory.
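The configuration step could look like the following minimal sketch. It assumes VS Code's own .vscode/mcp.json schema, which uses a top-level "servers" object of stdio launch commands. The launch commands are also assumptions: the reference SQLite server run through uvx as mcp-server-sqlite with a --db-path flag, and the reference Filesystem server run through npx as @modelcontextprotocol/server-filesystem. Other hosts, possibly including Gemini Code Assist's agent mode, may read a different file and key, so check your host's documentation. The helper names build_mcp_config and write_mcp_config are hypothetical.

```python
import json
from pathlib import Path


def build_mcp_config(db_path: str = "${workspaceFolder}/data/products.db") -> dict:
    """Return a VS Code-style mcp.json structure declaring both servers.

    ${workspaceFolder} is kept as a literal for VS Code to expand at launch.
    """
    return {
        "servers": {
            # Assumed launch command for the reference SQLite server.
            "sqlite": {
                "type": "stdio",
                "command": "uvx",
                "args": ["mcp-server-sqlite", "--db-path", db_path],
            },
            # Reference Filesystem server; the trailing argument is the
            # directory it is allowed to read and write.
            "filesystem": {
                "type": "stdio",
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-filesystem", "${workspaceFolder}"],
            },
        }
    }


def write_mcp_config(project_root: Path) -> Path:
    """Write the config to <project_root>/.vscode/mcp.json and return the path."""
    target = project_root / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(build_mcp_config(), indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    # Print instead of writing, so the generated config can be inspected first.
    print(json.dumps(build_mcp_config(), indent=2))
```

Passing the workspace folder as the Filesystem server's only allowed directory keeps the agent's file access scoped to the project.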

Workflow:

- Configuration: In the project’s .vscode/mcp.json file, configure the SQLite and Filesystem servers. The SQLite server will need the path to the database file.

- Contextual Prompting: Provide the Gemini agent with a clear example of the target JSON-LD Product schema format to ensure the output is structured correctly.

- Agent Prompt: Open the Agent Mode chat in VS Code and issue a multi-step prompt:

“Your task is to generate Schema.org JSON-LD for our products.

- Using the SQLite server’s read_query tool, query the database at ./data/products.db.

- Execute the following SQL query to retrieve all products:

SELECT id, name, description, price, image_url FROM products;

- For each product returned by the query, generate a valid JSON-LD object for a Product schema. The schema must include @context, @type, name, description, image, and an offers object with @type (Offer), priceCurrency, and price. The query returns no currency, so use our store’s ISO 4217 currency code for priceCurrency.

- Using the Filesystem server’s write_file tool, save each generated JSON-LD object into a separate file within the ./public/schemas/ directory. The filename should be product_{id}.json, where {id} is the product’s ID from the database.

Begin execution and show me the first generated file for verification before proceeding with the rest.”

This workflow demonstrates the agent’s ability to act as a data pipeline, seamlessly integrating database access with code generation and file system operations, a task that would typically require a custom script.
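For comparison, the custom script that this workflow replaces might look like the following minimal Python sketch. It assumes the products table has the five columns named in the prompt, that price is stored as a number, and that the caller passes in a single store currency. The names product_to_jsonld and export_schemas are hypothetical.

```python
import json
import sqlite3
from pathlib import Path

# Same query as in the agent prompt above.
QUERY = "SELECT id, name, description, price, image_url FROM products;"


def product_to_jsonld(row: sqlite3.Row, currency: str) -> dict:
    """Map one products row to a schema.org Product object with an Offer."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": row["name"],
        "description": row["description"],
        "image": row["image_url"],
        "offers": {
            "@type": "Offer",
            "priceCurrency": currency,
            # Formatted as text with two decimals to avoid float artifacts.
            "price": f"{row['price']:.2f}",
        },
    }


def export_schemas(conn: sqlite3.Connection, out_dir: Path, currency: str) -> list[Path]:
    """Write product_{id}.json for every row and return the written paths."""
    conn.row_factory = sqlite3.Row  # lets rows be indexed by column name
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for row in conn.execute(QUERY):
        path = out_dir / f"product_{row['id']}.json"
        path.write_text(json.dumps(product_to_jsonld(row, currency), indent=2), encoding="utf-8")
        written.append(path)
    return written
```

Calling export_schemas(sqlite3.connect("data/products.db"), Path("public/schemas"), "USD") would write one file per product; the currency code there is only an example.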

Enhancing On-Page SEO: robots.txt and Meta Tag Automation

Objective: To audit the project’s robots.txt file for common SEO mistakes and then generate contextually relevant meta tags for a specific HTML page.

Required MCP Servers:

- Filesystem Server (Reference): To read and write files within the project.

Workflow:

- Configuration: Ensure the Filesystem server is enabled in the agent’s tool picker.

- Agent Prompt:

“Perform an on-page SEO audit.

- Using the Filesystem server, read the contents of the robots.txt file located in the project root.

- Analyze the file against SEO best practices. Specifically, check if it is blocking any critical resources like CSS or JavaScript files, and ensure the sitemap URL is correctly specified. Provide a report of your findings and suggest any necessary changes.

- Next, use the Filesystem server to read the content of ./src/about-us.html.

- Based on the page’s content, generate an optimized <title> tag (under 60 characters) and a compelling <meta name="description"> tag (under 160 characters).

- Show me the proposed robots.txt changes and the new meta tags for my approval before you modify any files.”
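The robots.txt checks in this prompt can also be scripted deterministically, which helps when verifying the agent’s report. This sketch uses Python’s standard urllib.robotparser. The asset paths in CRITICAL_ASSETS and the Googlebot user agent are illustrative assumptions. This parser does plain prefix matching and does not understand the * and $ wildcards that Google supports, so rules that use them still need a manual review.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical CSS/JS paths to probe; replace with the site's real assets.
CRITICAL_ASSETS = ["/css/main.css", "/js/app.js"]


def audit_robots(robots_txt: str, base_url: str, user_agent: str = "Googlebot") -> dict:
    """Report which critical assets are disallowed and which sitemaps are declared."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [
        path
        for path in CRITICAL_ASSETS
        if not parser.can_fetch(user_agent, base_url.rstrip("/") + path)
    ]
    return {
        "blocked_assets": blocked,
        # site_maps() returns None when the file has no Sitemap: line.
        "sitemaps": parser.site_maps() or [],
    }
```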

This process leverages the LLM’s vast knowledge of SEO principles, applying it directly to the project’s files via the Filesystem server. It automates a routine but critical aspect of on-page optimization.
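The 60- and 160-character budgets from the prompt can likewise be checked mechanically before and after the agent edits a page. This is a minimal sketch built on the standard library’s html.parser. The class and function names are hypothetical, and character counts are only a rough proxy for what search engines actually display.

```python
from html.parser import HTMLParser

# Length budgets from the prompt above.
TITLE_MAX, DESCRIPTION_MAX = 60, 160


class HeadTagCollector(HTMLParser):
    """Collect the <title> text and the meta description content of a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_meta_lengths(html: str) -> list[str]:
    """Return readable problems with the page's title and meta description."""
    collector = HeadTagCollector()
    collector.feed(html)
    problems = []
    title = collector.title.strip()
    if not title:
        problems.append("missing <title>")
    elif len(title) > TITLE_MAX:
        problems.append(f"title is {len(title)} chars (max {TITLE_MAX})")
    if collector.description is None:
        problems.append("missing meta description")
    elif len(collector.description) > DESCRIPTION_MAX:
        problems.append(f"description is {len(collector.description)} chars (max {DESCRIPTION_MAX})")
    return problems
```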

Streamlining Analytics and Tracking Implementation

Objective: To correctly implement a Google Analytics 4 (GA4) tracking script across all HTML pages in a project and then verify that the script is firing correctly on a local development server.

Required MCP Servers:

- Filesystem Server (Reference): To modify the HTML files.

- Playwright MCP Server (Community/Official): To launch a browser and inspect network activity.

Workflow:

- Configuration: Configure both the Filesystem and Playwright servers in mcp.json.

- Agent Prompt:

“Your task is to install and verify our Google Analytics 4 tracking tag. The Measurement ID is G-ABC123XYZ.

- Using the filesystem tools, find all .html files within the ./dist/ directory.

- For each file, read its content and insert the standard GA4 gtag.js script snippet, configured with our Measurement ID, immediately after the opening <head> tag. Do not add the script if it already exists.

- Once all files are updated, use the Playwright server to navigate to http://localhost:8080.

- Inspect the page’s network requests to confirm that gtag.js is loaded from googletagmanager.com and that a measurement request is sent to a google-analytics.com URL containing /g/collect.

- Report back with the list of modified files and the result of the network verification.”

This advanced workflow bridges the gap between code modification and quality assurance. The agent not only performs the implementation but also validates its own work, significantly increasing confidence and reducing debugging time.
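The file-editing half of this workflow amounts to an idempotent text transformation. This sketch shows one way to do it in Python. It assumes the standard gtag.js snippet and UTF-8 files under ./dist/. The names inject_gtag and inject_into_dist are hypothetical, and the "already installed" check is a simple substring test.

```python
import re
from pathlib import Path

MEASUREMENT_ID = "G-ABC123XYZ"  # example ID from the prompt above

# Standard gtag.js snippet; {mid} is filled with the Measurement ID and the
# doubled braces produce literal JavaScript braces after str.format.
GTAG_SNIPPET = """<!-- Google tag (gtag.js) -->
<script async src="https://www.googletagmanager.com/gtag/js?id={mid}"></script>
<script>
  window.dataLayer = window.dataLayer || [];
  function gtag(){{dataLayer.push(arguments);}}
  gtag('js', new Date());
  gtag('config', '{mid}');
</script>"""

# Opening <head> tag with or without attributes (does not match <header>).
HEAD_OPEN = re.compile(r"<head(\s[^>]*)?>", re.IGNORECASE)


def inject_gtag(html: str, measurement_id: str) -> str:
    """Insert the snippet right after <head>, unless gtag.js is already present."""
    if "googletagmanager.com/gtag/js" in html:
        return html  # idempotent: never add a second copy
    match = HEAD_OPEN.search(html)
    if match is None:
        return html  # leave fragments without a <head> untouched
    snippet = GTAG_SNIPPET.format(mid=measurement_id)
    return html[: match.end()] + "\n" + snippet + html[match.end():]


def inject_into_dist(dist_dir: Path, measurement_id: str) -> list[Path]:
    """Apply inject_gtag to every .html file under dist_dir; return modified files."""
    modified = []
    for path in sorted(dist_dir.rglob("*.html")):
        original = path.read_text(encoding="utf-8")
        updated = inject_gtag(original, measurement_id)
        if updated != original:
            path.write_text(updated, encoding="utf-8")
            modified.append(path)
    return modified
```

Running inject_into_dist(Path("dist"), MEASUREMENT_ID) a second time returns an empty list, because pages that already load gtag.js are skipped.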

Optimizing Core Web Vitals (CWV)

Objective: To diagnose and fix a Cumulative Layout Shift (CLS) issue, a key Core Web Vital metric that impacts user experience and search rankings.

Required MCP Servers:

- Playwright MCP Server (Community/Official): To load the page in a real browser and collect performance measurements.

- Filesystem Server (Reference): To read and modify the source code.

Workflow:

- Configuration: Ensure both Playwright and Filesystem servers are active.

- Agent Prompt:

“We need to improve the Core Web Vitals for our product page.

- Using the Playwright server, open http://localhost:8080/product/detail/123 in a browser and measure its performance.

- Report the LCP and CLS values. INP depends on real user interactions, so it cannot be measured from a page load alone.

- If the CLS score is above 0.1, identify the specific DOM element that is causing the largest layout shift.

- Once the problematic element is identified (e.g., an image without dimensions), use the filesystem tools to locate and read the relevant HTML and CSS files (./src/products/detail.html and ./css/product.css).

- Propose a specific code change to fix the CLS issue. For an image, this would be adding explicit width and height attributes.

- Present the code change to me as a diff for approval before applying it.”
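The 0.1 threshold in the prompt applies to CLS as browsers compute it. CLS is not a plain sum of every shift. It is the largest "session window" of shifts, where each shift starts less than 1 second after the previous one and the window spans less than 5 seconds, and shifts that occur right after user input are ignored. The sketch below reproduces that windowing over layout-shift entries. Those entries could be collected in the page with a PerformanceObserver observing "layout-shift" entries, for example through Playwright. The LayoutShift dataclass and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LayoutShift:
    """Fields mirroring a browser "layout-shift" performance entry."""
    start_time: float       # milliseconds since navigation start
    value: float            # layout shift score of this single shift
    had_recent_input: bool  # shifts right after user input are excluded


def cumulative_layout_shift(entries: list[LayoutShift]) -> float:
    """Return the largest session-window sum of unexpected layout shifts.

    A window keeps growing while each shift starts < 1 s after the previous
    shift and < 5 s after the window's first shift; otherwise a new one starts.
    """
    cls = 0.0
    session_value = 0.0
    session_start = last_time = None
    for entry in sorted(entries, key=lambda e: e.start_time):
        if entry.had_recent_input:
            continue
        if (
            session_start is not None
            and entry.start_time - last_time < 1000
            and entry.start_time - session_start < 5000
        ):
            session_value += entry.value
        else:
            session_value = entry.value
            session_start = entry.start_time
        last_time = entry.start_time
        cls = max(cls, session_value)
    return cls
```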

This workflow exemplifies the pinnacle of agentic development. The agent acts as a performance engineer, using a specialized tool to diagnose a problem, using its own knowledge to devise a solution, and then using another tool to implement the fix, all while keeping the human developer in the loop for final approval.
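The fix proposed in the final step can be checked across a template with a short scan for <img> tags that lack explicit dimensions. This is a minimal standard-library sketch with hypothetical names. It also flags images whose space is reserved purely in CSS (for example with aspect-ratio), so treat its output as candidates rather than confirmed CLS sources.

```python
from html.parser import HTMLParser


class UnsizedImageFinder(HTMLParser):
    """Record <img> tags that are missing a width or height attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.unsized = []  # (line number, src) pairs

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        names = {name for name, _ in attrs}
        if not {"width", "height"} <= names:
            line, _col = self.getpos()  # position of the start of this tag
            self.unsized.append((line, dict(attrs).get("src", "")))


def find_unsized_images(html: str) -> list[tuple[int, str]]:
    """Return (line, src) for each <img> without explicit dimensions."""
    finder = UnsizedImageFinder()
    finder.feed(html)
    finder.close()
    return finder.unsized
```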

Mapping MCP Servers to Digital Marketing Use Cases

The following table serves as a strategic quick-reference guide, synthesizing the detailed workflows into a compact playbook for applying MCP to common digital marketing tasks.

| Marketing Task | Required Capability | Recommended MCP Server(s) | Example AI Prompt Snippet |
|---|---|---|---|
| Generate Product Schema | Database Access & File Writing | PostgreSQL or SQLite + Filesystem | “Using the database server, query the product data… then, using the Filesystem server’s write_file tool, write the JSON-LD schema…” |
| Create/Audit robots.txt | File System Read/Write | Filesystem | “Using the Filesystem server, read robots.txt, analyze it for SEO best practices, and suggest improvements…” |
| Implement GA4 Tracking | File Editing & Live Site Verification | Filesystem + Playwright | “Using the filesystem tools, inject the GA4 script… then use the Playwright server to load the page and verify the network requests…” |
| Audit Core Web Vitals | Live Site Audit & Code Modification | Playwright or Puppeteer + Filesystem | “Using Playwright, measure CLS on the page… identify the shifting element… then use the filesystem tools to read the code and propose a fix…” |

Future Outlook and Strategic Recommendations

The Model Context Protocol and the rise of agentic AI represent a fundamental inflection point in software development, with profound implications for the digital marketing industry. The ability to automate complex, context-aware tasks directly within the IDE is not an incremental improvement but a paradigm shift. This final section provides a forward-looking analysis of the ecosystem’s trajectory and offers strategic recommendations for adoption.

The Trajectory of Agentic MarTech

The MCP ecosystem is still in its early stages, but its trajectory points toward increasing sophistication and integration.

- Maturation of the Ecosystem: The number and variety of MCP servers will continue to grow at an accelerated pace. While the current landscape is dominated by foundational developer tools, the future will likely see the emergence of more specialized, high-level servers. Major marketing and analytics platforms (e.g., HubSpot, Salesforce, Segment, Google Analytics) are prime candidates to release official MCP servers, allowing AI agents to interact directly with their APIs and data, creating a more seamlessly interconnected MarTech stack.

- Evolution of Developer Roles: As noted earlier, the role of the technical marketer will continue to evolve from that of a pure coder to an AI agent orchestrator. The most valuable skills will be the ability to deconstruct complex marketing goals into logical, automatable workflows, select the appropriate combination of MCP tools, and craft effective prompts to guide the AI agent. This higher level of abstraction will allow technical marketers to focus more on strategy and less on boilerplate implementation.

- Standardization and Interoperability: The current fragmentation in MCP configuration files across different hosts is a point of friction that will likely be addressed as the ecosystem matures. A community-driven standard for defining and sharing server configurations, analogous to .editorconfig, could emerge to enhance the portability of agentic workflows across different IDEs and command-line tools.

Recommendations for Adoption

For a technical marketer or SEO engineer looking to leverage this new paradigm, a strategic and phased approach is recommended.

- Platform Choice: Based on the comprehensive analysis in this report, the primary recommendation is to begin with Google’s Gemini Code Assist (for individuals). Its robust, high-limit free tier and full, uncompromised support for agentic, MCP-driven workflows in VS Code make it the ideal and most powerful entry point into this ecosystem at no cost.

- Phased Implementation: Adoption should begin with low-risk, read-only tasks to build familiarity and trust with the AI agent. Use filesystem and search servers to analyze code, audit on-page factors, or research topics. Once comfortable, progress to more complex workflows that involve writing or modifying code, always ensuring that the agent’s proposed changes are carefully reviewed before approval.

- Embrace Composition: Adopt a mindset of composing simple, general-purpose tools to achieve complex goals. Rather than waiting for a perfect, all-in-one “SEO server,” focus on mastering the core toolkit: filesystem access, browser automation, and database interaction. This compositional approach is more flexible, powerful, and aligned with the current state of the MCP ecosystem.

- Prioritize Security: The power to automate comes with responsibility. Always maintain a security-first posture. Carefully scrutinize every action an agent proposes, especially those involving file modifications, command execution, or external API calls. Where possible, leverage protocol security features like roots to explicitly limit an agent’s filesystem access to the current project directory.

Concluding Analysis

The Model Context Protocol is not merely an incremental addition to the developer’s toolkit; it is the architectural foundation for a new era of software development and automation. It provides the standardized language necessary for AI to move from being a passive assistant to an active collaborator.

For professionals in the technical marketing and SEO domains, this technology offers a clear path to automating the intricate, repetitive, and data-intensive tasks that define their work. The ability to programmatically diagnose a Core Web Vitals issue, query a database for product information, generate corresponding structured data, and implement the necessary code changes—all through a conversational interface—represents a monumental leap in productivity and strategic capability.

The current landscape provides a clear and accessible starting point. The combination of Visual Studio Code as a host, Google’s Gemini Code Assist as a powerful and free AI agent, and the growing ecosystem of open-source MCP servers creates an unprecedented opportunity. By embracing this agentic paradigm today, technical marketers can begin building the future-critical skills of AI workflow orchestration, securing a significant and lasting competitive advantage in an industry on the cusp of profound change.