AI WordPress SEO Automation: REST API, Gemini & Copilot CLI

Executive Summary

The discipline of Search Engine Optimization (SEO) is undergoing a fundamental paradigm shift, moving away from manual, interface-driven tasks toward programmatic, AI-augmented automation. This report provides a comprehensive architectural blueprint for constructing a sophisticated, scalable, and automated SEO engine for the WordPress platform. The core of this architecture is a powerful triad of technologies: the WordPress REST API, which serves as the programmable control plane; Google’s Gemini CLI, which functions as an advanced content intelligence and generation engine; and GitHub Copilot CLI, which acts as an agentic development partner for scripting and executing the automation workflows.

The central thesis of this analysis is that the strategic integration of these three components transforms SEO from a reactive, labor-intensive process into a proactive, data-driven, and highly efficient discipline. By leveraging the WordPress REST API, developers and SEO professionals can gain direct, programmatic access to the critical data points that influence search rankings. When combined with the natural language processing and content generation capabilities of Gemini CLI and the code generation prowess of GitHub Copilot CLI, this access unlocks the potential for unprecedented levels of automation.

Key findings within this report underscore the critical importance of foundational architectural decisions. The choice of a WordPress SEO plugin, for instance, is no longer a mere feature comparison but a strategic decision that dictates the viability of the entire automation workflow. The analysis reveals that plugins with a robust, read/write REST API, such as All in One SEO (AIOSEO), provide a direct and efficient path to programmatic control. In contrast, plugins with read-only APIs necessitate more complex workarounds. Furthermore, the report establishes a clear and effective division of labor between the two AI tools: Gemini CLI is best utilized for its strengths in content analysis and generation (the “what”), while GitHub Copilot CLI excels at constructing the operational scripts and API calls that execute the strategy (the “how”).

This document is intended for a technical audience, including WordPress developers, technical SEO specialists, and systems architects. It provides not only the strategic framework but also the detailed, actionable code examples and workflows necessary to implement a complete, AI-powered SEO optimization system. The recommendations and methodologies outlined herein are designed to serve as a definitive guide for building a more intelligent, automated, and impactful SEO presence on the WordPress platform.

I. The Programmable SEO Layer: Mastering the WordPress REST API

The foundation of any scalable automation strategy for WordPress is its native Representational State Transfer (REST) Application Programming Interface (API). This API transforms a WordPress installation from a monolithic content management system into a programmable platform, providing the essential control plane for all subsequent AI-driven optimization tasks. A deep understanding of its structure, security requirements, and the specific endpoints relevant to SEO is a non-negotiable prerequisite for successful implementation.

1.1. Deconstructing the Core API for SEO Applications

The WordPress REST API provides a standardized set of endpoints for interacting with core data types, using standard HTTP methods such as GET (retrieve data), POST (create or update data), PUT (update data), and DELETE (remove data). These interactions are structured around JSON (JavaScript Object Notation), a lightweight and human-readable data format. The default namespace for core WordPress data is /wp-json/wp/v2/, which serves as the base for accessing posts, pages, users, media, and other fundamental resources.

For the purpose of SEO automation, several key endpoints are of primary importance. The schemas of these endpoints contain specific fields that can be programmatically manipulated to directly influence on-page SEO factors.

- /posts and /pages: These are the most critical endpoints, representing the primary content of the website. The object schema for a single post or page includes several writable fields that are directly relevant to SEO:

  - title: The post title, which is typically used to generate the HTML <title> tag.

  - slug: The URL-friendly version of the title, which forms a key part of the post’s permalink.

  - excerpt: A hand-crafted summary of the post. While not always used for the meta description by default, it can be programmatically populated and then used by themes or SEO plugins for that purpose.

  - meta: This is arguably the most powerful field for SEO automation. It acts as a gateway to update custom fields, also known as post metadata. Many SEO plugins store their specific data (custom SEO titles, meta descriptions, schema settings, and social media tags) in the wp_postmeta database table. The meta field in the REST API provides a direct channel to read and write this data, provided the fields are properly registered.

- /media: This endpoint controls the site’s media library. For image SEO, the ability to programmatically update the alt_text, caption, and description fields for media items is crucial for accessibility and for providing search engines with context about the image content.

It is also essential to recognize that many modern WordPress sites utilize Custom Post Types (CPTs) for structured content like products, events, or portfolios. To ensure these CPTs are accessible and controllable via the automation workflow, they must be registered with the 'show_in_rest' => true argument. This makes them available through the API at an endpoint like /wp-json/wp/v2/<cpt_slug>, mirroring the structure of the default /posts endpoint.
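The endpoint and field structure described above can be sketched as a small request builder. This is a minimal illustration in Python rather than bash, chosen only for easier JSON handling. The site URL, the helper name `build_update_request`, and the sample IDs are hypothetical, and authentication is deliberately left out.

```python
import json
import urllib.request

# Placeholder site URL (assumption); replace with the real WordPress host.
API_ROOT = "https://example.com/wp-json/wp/v2"


def build_update_request(resource: str, object_id: int, fields: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that updates one object.

    `resource` is the collection name, e.g. "posts", "pages", "media",
    or the slug of a custom post type registered with show_in_rest.
    """
    url = f"{API_ROOT}/{resource}/{object_id}"
    body = json.dumps(fields).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


# Core SEO-relevant fields on a post, and alt text on a media item.
post_req = build_update_request("posts", 42, {"title": "New Title", "slug": "new-title"})
media_req = build_update_request("media", 7, {"alt_text": "Golden retriever catching a frisbee"})
```

Nothing is sent here. The request objects only show how the URL, HTTP method, and JSON body fit together for the core endpoints.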

1.2. Authentication and Security: The Gateway to Automation

While the WordPress REST API allows for unauthenticated GET requests to public data, any action that modifies or creates content (POST, PUT, DELETE requests) requires secure authentication. Leaving these write-enabled endpoints unsecured would create a significant attack vector, exposing the site to unauthorized content injection, data modification, or deletion. Therefore, implementing a robust authentication strategy is the first step in building a secure automation pipeline.

Several authentication methods are available, but for server-to-server scripting—the primary use case for this report—the most direct and recommended approach is Application Passwords.

- Application Passwords: This feature, built into WordPress core since version 5.6, allows for the creation of unique, application-specific passwords for any user account. These passwords can be generated within the user’s profile settings and can be revoked at any time without affecting the user’s main login password. They are designed specifically for API authentication and are used with the Basic Authentication scheme. A script would include an Authorization header whose value is the word Basic, a space, and then the Base64 encoding of the string username:application_password.
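As a rough sketch of how that header is assembled, the Python helper below builds it from a username and an Application Password. The function name `basic_auth_header`, the username `api_user`, and the environment variable `WP_APP_PASSWORD` are illustrative assumptions, not anything WordPress prescribes.

```python
import base64
import os


def basic_auth_header(username: str, app_password: str) -> dict:
    """Return the Authorization header for HTTP Basic Authentication."""
    credentials = f"{username}:{app_password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# Read the secret from the environment so it never lives in the script itself.
headers = basic_auth_header("api_user", os.environ.get("WP_APP_PASSWORD", ""))
```

Base64 is an encoding, not encryption, which is why the header should only ever travel over HTTPS.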

While other methods like OAuth2 exist and are leveraged by platforms like WordPress.com for third-party application access, they introduce a level of complexity that is often unnecessary for the backend automation scripts central to this architecture.

A core tenet of API security is the Principle of Least Privilege. API requests are authenticated as a specific WordPress user, and they inherit that user’s permissions. It is a severe security risk to use an Administrator account’s credentials for automation scripts. A breach of the script or its environment would grant the attacker full control over the entire WordPress site. The best practice is to create a dedicated user account specifically for the API automation, assigning it a role with only the necessary permissions (e.g., Editor, which can create and edit posts but cannot manage plugins or users). This strategy drastically reduces the potential damage if the API credentials are ever compromised. Additionally, all API traffic must be encrypted using SSL/HTTPS to protect credentials and data from interception.

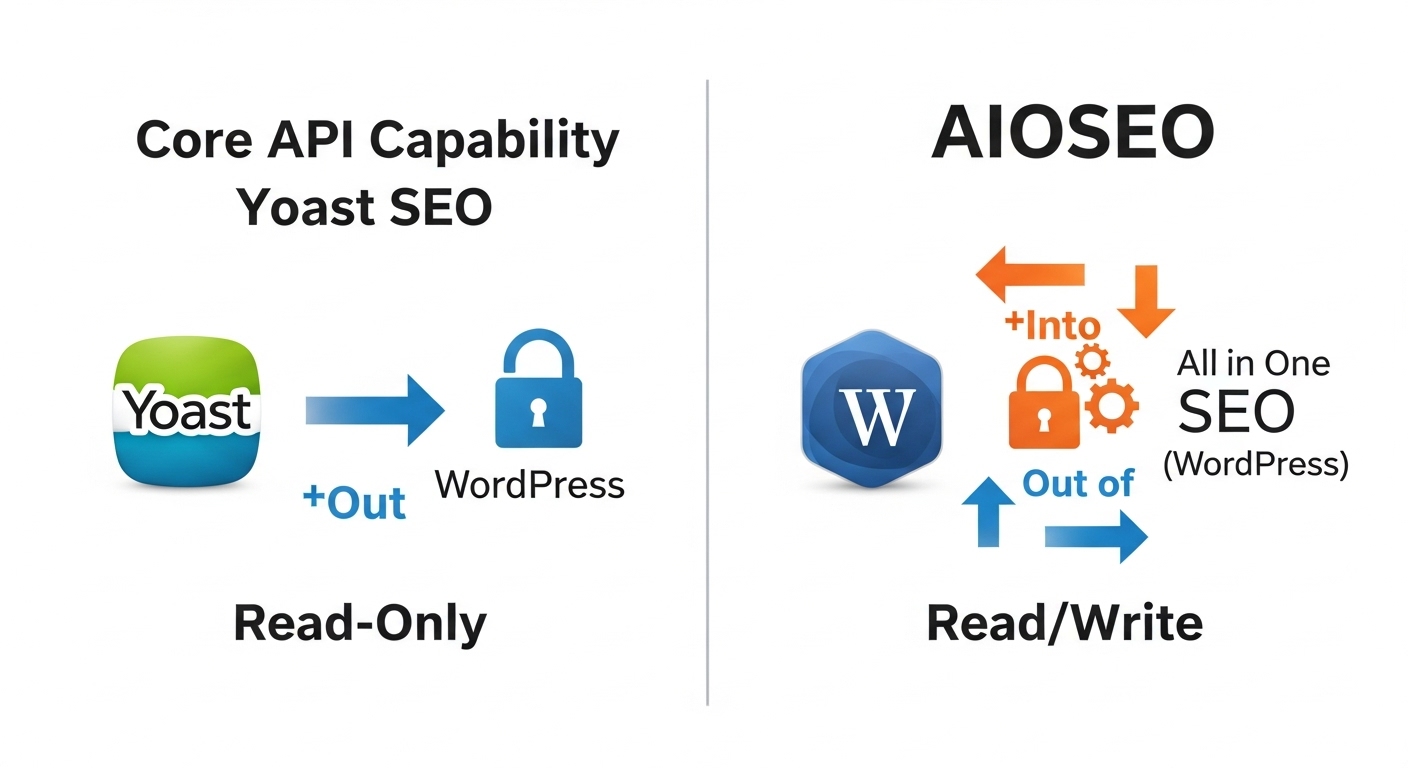

1.3. The Critical Fork in the Road: AIOSEO vs. Yoast API Extensions

The choice of an SEO plugin is a fundamental architectural decision that profoundly impacts the design and complexity of an automation workflow. The two market-leading plugins, Yoast SEO and All in One SEO (AIOSEO), have fundamentally different philosophies regarding their REST API implementations, creating a critical divergence for developers.

- Yoast SEO REST API: The API provided by Yoast SEO is primarily read-only. It is architected to serve SEO metadata to headless WordPress frontends. When queried, the native WordPress /posts endpoint is appended with two fields: yoast_head (a prefabricated HTML blob of all meta tags) and yoast_head_json (the raw data in a JSON object). While this is excellent for consuming data, the API does not provide native endpoints for programmatically updating this SEO data via POST or PUT requests. This means that out-of-the-box, one cannot use the Yoast API to change an SEO title or meta description.

- AIOSEO REST API: In stark contrast, AIOSEO is built with a comprehensive, read/write REST API designed explicitly for programmatic control. It offers a full suite of endpoints that allow developers to control every aspect of the plugin’s settings, including performing efficient bulk operations to update SEO data across multiple posts, pages, or even sites simultaneously. This API-first approach makes AIOSEO an ideal choice for building the kind of deep, scalable automation workflows discussed in this report.

This difference creates two distinct implementation paths. The AIOSEO path is direct: the automation scripts will target AIOSEO’s dedicated API endpoints. The Yoast path requires a workaround. One option is to use a third-party plugin, such as “SEO Fields API Support,” which acts as a bridge. This type of plugin exposes Yoast’s internal meta fields (e.g., _yoast_wpseo_title and _yoast_wpseo_metadesc) to the writable meta object of the native WordPress /posts endpoint. The other, more fundamental option involves custom development, which is explored in the next section.

For the purposes of building a robust, direct, and feature-rich automation system, the AIOSEO REST API is the recommended architectural choice. Its native support for write operations and bulk updates aligns perfectly with the goal of programmatic SEO optimization. The remainder of this report will primarily use AIOSEO as the example implementation, while also providing the necessary context for the custom field approach required for Yoast.

| Feature | Yoast SEO API | AIOSEO REST API | Implication for Automation |
|---|---|---|---|
| Read SEO Metadata | Yes (via yoast_head and yoast_head_json fields) | Yes (via aioseo_meta_data field) | Both plugins allow for programmatic auditing of existing SEO data. |
| Update SEO Metadata | No (Read-only API) | Yes (Full read/write capabilities) | AIOSEO provides a direct path for updates. Yoast requires custom code or a third-party plugin. |
| Bulk Operations | Not supported via API | Yes (Designed for bulk updates) | AIOSEO is architecturally superior for large-scale, programmatic SEO changes. |
| Schema Control | Read-only | Yes (Programmatic control) | AIOSEO allows for dynamic, automated generation and updating of structured data. |
| Redirection Management | Not available via API | Yes (Programmatic control) | Automation scripts can manage redirects via the AIOSEO API, a powerful feature for site migrations. |
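Since both plugins expose readable SEO metadata on the /posts response, an audit pass can be sketched without any write access. The Python example below assumes the relevant object (yoast_head_json or aioseo_meta_data) carries a `description` key. That key name and the sample data are assumptions for illustration and should be checked against a real response.

```python
def missing_descriptions(posts: list, seo_field: str = "yoast_head_json") -> list:
    """Return the IDs of posts whose SEO metadata has no non-empty description.

    `posts` is the decoded JSON list returned by GET /wp-json/wp/v2/posts.
    """
    missing = []
    for post in posts:
        seo = post.get(seo_field) or {}
        description = (seo.get("description") or "").strip()
        if not description:
            missing.append(post["id"])
    return missing


# Hypothetical, trimmed-down API response used only to exercise the function.
sample = [
    {"id": 1, "yoast_head_json": {"title": "A", "description": "Has one."}},
    {"id": 2, "yoast_head_json": {"title": "B"}},
    {"id": 3, "yoast_head_json": {"title": "C", "description": "   "}},
]
needs_work = missing_descriptions(sample)
```

The resulting ID list is a natural input for the generation workflows described later in the report.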

1.4. Mastering Metadata: The meta and Custom Field Endpoints

Regardless of the SEO plugin used, the underlying storage mechanism for most post-specific SEO data is WordPress’s metadata system, commonly known as custom fields. Understanding how to interact with this system via the REST API is the key to unlocking programmatic control, especially when a direct, write-enabled plugin API is not available (as with Yoast).

The WordPress REST API allows for the updating of custom fields through the meta object in a POST request to an endpoint like /wp/v2/posts/<id>. However, for security and performance reasons, a custom field is not writable via the API by default. It must be explicitly registered and exposed to the REST API using the register_meta() function.

This function is typically placed within a custom plugin or a theme’s functions.php file and is hooked into the init action. The critical argument is ‘show_in_rest’ => true. When this is set, WordPress includes the specified meta key in the API’s schema, making it both readable and writable.

To create a DIY writable API layer for Yoast SEO, one would need to implement the following:

- Identify the exact meta keys Yoast uses to store its data. The most common ones are _yoast_wpseo_title for the SEO title and _yoast_wpseo_metadesc for the meta description.

- Use the register_meta() function to expose these keys to the REST API.

An example implementation would look like this:

```php
add_action( 'init', 'register_yoast_seo_meta_fields' );

/**
 * Expose Yoast's SEO title and meta description keys to the REST API.
 */
function register_yoast_seo_meta_fields() {
    $args = array(
        'type'          => 'string',
        'single'        => true,
        'show_in_rest'  => true,
        // Keys that start with "_" are protected meta. Without an
        // auth_callback, WordPress rejects REST writes to them.
        'auth_callback' => function () {
            return current_user_can( 'edit_posts' );
        },
    );

    register_meta( 'post', '_yoast_wpseo_title', $args );
    register_meta( 'post', '_yoast_wpseo_metadesc', $args );
}
```

With this code in place, an automation script can now update Yoast’s SEO title and description for a specific post by sending a POST request to https://yoursite.com/wp-json/wp/v2/posts/<post-id> with a JSON body like the following:

```json
{
  "meta": {
    "_yoast_wpseo_title": "New Programmatically Updated SEO Title",
    "_yoast_wpseo_metadesc": "This is a new meta description set via the REST API."
  }
}
```

This method effectively bridges the gap left by Yoast’s read-only API, but it underscores the architectural elegance of AIOSEO’s native approach, which requires no such custom registration for its core SEO fields.

II. Architecting the Automation: A Strategic Framework for AI-Driven SEO

With a firm grasp of the programmable API layer, the next step is to develop a strategic framework for automation. Technology is merely an enabler; the true value of programmatic SEO lies in applying that technology to high-impact tasks in a structured, measurable way. Automating for the sake of automation can lead to wasted effort on low-impact activities. A strategic approach ensures that every script and workflow is designed to produce tangible improvements in SEO performance.

2.1. Identifying High-Impact Automation Targets

The first step in any automation project is to identify which tasks are both valuable to automate and technically feasible. Drawing from established on-page and technical SEO best practices, a clear list of prime candidates emerges.

On-Page SEO Automation Targets: These are tasks related to the content and structure of individual pages. They are often repetitive and time-consuming when performed manually, making them ideal for automation.

- Page Title Optimization: Automatically generating or refining page titles to include target keywords and adhere to length constraints (under 65 characters).

- Meta Description Generation: Creating unique, compelling meta descriptions (roughly 150-160 characters) at scale for pages that are missing them or have generic, auto-generated ones.

- Image Alt Text Creation: Auditing the media library for images with missing alt text and generating descriptive, keyword-rich alternatives to improve image search visibility and accessibility.

- Internal Linking Analysis: Programmatically analyzing new content to suggest relevant internal linking opportunities from existing articles, thereby improving site architecture and distributing link equity.

- Schema Markup Implementation: Automatically generating and embedding structured data (e.g., JSON-LD for articles, recipes, or FAQs) to enhance search engine understanding and qualify for rich snippets in the SERPs.

Technical SEO Automation Targets: These tasks involve monitoring the overall health and crawlability of the site. They are often data-intensive and require regular execution, making them perfect for scheduled scripts.

- Broken Link Detection: Regularly crawling the site to identify and report on internal and external broken links (404 errors), which negatively impact user experience and crawl efficiency.

- Duplicate Content Monitoring: Running checks to find duplicate page titles and meta descriptions across the site, which can dilute ranking signals.

- Performance Monitoring Triggers: While direct page speed optimization is complex, automation can be used to regularly monitor Core Web Vitals (LCP, INP, CLS; INP replaced FID as a Core Web Vital in March 2024) and trigger alerts when pages fall below performance thresholds, prompting manual investigation.

To prioritize these potential tasks, a simple Impact vs. Feasibility Matrix can be employed. Tasks that are high-impact (e.g., fixing missing meta descriptions on thousands of product pages) and high-feasibility (the process can be clearly defined and scripted) should be tackled first. Tasks that are low-impact or low-feasibility (e.g., attempting to fully automate creative content writing) should be deprioritized.
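One simple way to make such a matrix concrete is to score each candidate and sort by the product of the two scores. The Python sketch below does this. The 1-5 scale, the multiplication rule, and the sample scores are illustrative assumptions, not a prescribed methodology.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    impact: int       # 1 (low) to 5 (high), assigned by the team
    feasibility: int  # 1 (hard to script) to 5 (easy to script)


def prioritize(tasks: list) -> list:
    """Order tasks from highest to lowest impact x feasibility score."""
    return sorted(tasks, key=lambda t: t.impact * t.feasibility, reverse=True)


# Hypothetical scores for three candidates mentioned in this section.
backlog = [
    Task("Fully automated creative writing", impact=2, feasibility=1),
    Task("Fill missing meta descriptions", impact=5, feasibility=5),
    Task("Backfill image alt text", impact=4, feasibility=4),
]
ranked = [task.name for task in prioritize(backlog)]
```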

2.2. Defining Inputs, Processes, and Outputs (IPO Model)

To ensure that each automation script is well-designed, robust, and predictable, it is beneficial to apply a structured design pattern like the Input-Process-Output (IPO) model. This approach forces a clear definition of the script’s purpose and mechanics before any code is written.

- Input: What specific data does the script require to begin its execution? This could be a list of post IDs, the raw HTML content of a single post, or a URL to an image file. Defining the input clarifies the data-gathering phase of the script.

- Process: What are the sequential steps the script must perform? This is the core logic of the automation. For example:

  - Fetch post content using a GET request.

  - Sanitize the HTML to extract plain text.

  - Construct a prompt for the AI model.

  - Send the prompt to Gemini CLI and capture the response.

  - Parse and validate the AI’s output.

  - Construct a JSON payload for the WordPress REST API.

  - Execute a POST request to update the post.

- Output: What is the final, desired result of the script’s execution? This could be a successful 200 OK response from the WordPress API, a new row in a log file detailing the change, or an email notification reporting an error. A clear definition of the output is essential for error handling and monitoring.

Adopting this structured model for each workflow ensures that the resulting automation is reliable, maintainable, and easy to debug.
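The Input-Process-Output steps listed above can be sketched as a single function whose external dependencies are passed in. In this Python illustration, `fetch_post`, `generate`, and `update_post` are hypothetical callables standing in for the GET request, the Gemini CLI call, and the POST request. Writing the result to the core `excerpt` field is also just an assumption made to avoid plugin-specific payloads.

```python
import html
import json
import re


def html_to_text(markup: str) -> str:
    """Crude tag stripper; a production pipeline might use a real HTML parser."""
    text = re.sub(r"<[^>]+>", " ", markup)
    return re.sub(r"\s+", " ", html.unescape(text)).strip()


def build_prompt(text: str) -> str:
    return "Write a meta description (max 160 characters) for this post:\n\n" + text


def run_pipeline(post_id, fetch_post, generate, update_post, max_len=160):
    post = fetch_post(post_id)                          # Input: fetch the post
    text = html_to_text(post["content"]["rendered"])    # Process: sanitize HTML
    description = generate(build_prompt(text)).strip()  # Process: call the model
    if not description or len(description) > max_len:   # Process: validate
        return {"post_id": post_id, "status": "rejected"}
    payload = json.dumps({"excerpt": description})      # Process: build payload
    status = update_post(post_id, payload)              # Process: send update
    return {"post_id": post_id, "status": status}       # Output: result record
```

Because the three callables are injected, the same function can be exercised with stubs during development and wired to real HTTP and CLI calls later.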

2.3. Establishing Baselines and Measuring Success

Automation without measurement is a leap of faith. To justify the development effort and prove the efficacy of the implemented SEO strategies, it is crucial to establish baseline metrics before deploying any changes and to monitor performance afterward. The process should be methodical:

- Identify a Target Group: Select a cohort of under-optimized pages (e.g., 100 blog posts with no meta descriptions).

- Establish Baselines: Before running any scripts, record the key performance indicators (KPIs) for this group of pages over a set period (e.g., the previous 30 days). Essential KPIs include:

  - Organic Traffic: The number of sessions from organic search, tracked via Google Analytics.

  - Keyword Rankings: The average position of target keywords for these pages, monitored with tools like Semrush, Wincher, or Ahrefs.

  - Click-Through Rate (CTR): The percentage of impressions that result in a click, obtained from Google Search Console. This is a direct measure of how compelling the page’s title and description are in the search results.

- Deploy Automation: Execute the automation script on the target group (e.g., the bulk meta description generation workflow).

- Measure and Compare: Monitor the same KPIs for the subsequent 30-60 days and compare the post-automation performance against the established baseline. A significant uplift in CTR and organic traffic would provide strong evidence of the automation’s positive impact.

This data-driven approach transforms the automation project from a technical exercise into a measurable business initiative, demonstrating a clear return on investment.
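The before/after comparison boils down to simple arithmetic. The Python sketch below computes CTR from clicks and impressions and expresses the change as a relative uplift. The click and impression figures are made-up numbers for illustration only.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction (0.02 means 2%)."""
    return clicks / impressions if impressions else 0.0


def relative_uplift(before: float, after: float) -> float:
    """Relative change versus the baseline (0.5 means +50%)."""
    if before == 0:
        raise ValueError("baseline is zero; relative uplift is undefined")
    return (after - before) / before


# Hypothetical Search Console totals for the same cohort of pages.
baseline = ctr(clicks=120, impressions=6000)   # 30 days before the change
follow_up = ctr(clicks=180, impressions=6000)  # 30 days after the change
print(f"CTR uplift: {relative_uplift(baseline, follow_up):.0%}")  # prints "CTR uplift: 50%"
```

A comparison like this does not control for seasonality or ranking changes, so it is best read alongside the other KPIs rather than in isolation.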

III. The AI Content Engine: Leveraging Gemini CLI for SEO Intelligence

At the heart of the content optimization workflow lies the AI engine responsible for generating intelligent, context-aware, and SEO-friendly text. Google’s Gemini CLI is an open-source AI agent that provides direct, terminal-based access to the powerful Gemini family of models, making it an ideal component for scripted automation. Its capabilities extend beyond simple chat, offering a programmatic interface for sophisticated text generation, summarization, and analysis.

3.1. Configuring Gemini CLI for Headless Automation

To integrate Gemini CLI into an automated workflow, it must be configured to run non-interactively, or in “headless mode.” This allows shell scripts to call the CLI, pass it data, and receive its output without any manual intervention.

- Installation and Authentication: The first step is to install Gemini CLI, which requires Node.js (version 20+). It can be installed globally using the Node Package Manager (npm):

  ```bash
  npm install -g @google/gemini-cli
  ```

  For authentication in a scripting environment, there are two primary methods. The first is to perform an initial interactive login (gemini command) with a personal Google account, which grants a generous free tier of usage (60 requests per minute, 1,000 per day). For more robust, server-based automation, the recommended approach is to obtain a Gemini API key from Google AI Studio and make it available to the CLI as an environment variable (export GEMINI_API_KEY="YOUR_API_KEY").

- Headless/Non-Interactive Mode: This is the cornerstone of scripting with Gemini CLI. The tool can be executed non-interactively in two ways:

  - Using the --prompt or -p flag: This allows a prompt to be passed directly as a command-line argument.

    ```bash
    gemini -p "Summarize the key points of the 2024 presidential election."
    ```

  - Piping (|) standard input: This is a powerful technique for passing larger amounts of data, such as the full content of a blog post, to the CLI.

    ```bash
    cat blog_post.txt | gemini -p "Generate a meta description for the following text:"
    ```

  These methods are what transform Gemini CLI from an interactive assistant into a programmable component that can be seamlessly integrated into loops and larger automation scripts.

- Context Files (GEMINI.md): To ensure consistency in the AI’s output, Gemini CLI supports the use of GEMINI.md context files. These are Markdown files that provide persistent, hierarchical instructions to the model. A GEMINI.md file can be placed in the project’s root directory or in a user’s home directory (~/.gemini/). For SEO tasks, this file is invaluable for defining a consistent “house style” for all generated content. For example, a GEMINI.md file could contain:

  ```markdown
  # SEO Content Generation Rules

  - All generated meta descriptions must be under 160 characters.
  - All generated meta descriptions must be written in an active voice and include a compelling call-to-action.
  - All generated image alt text must be descriptive and avoid keyword stuffing.
  ```

  With this file in place, every prompt sent to Gemini CLI from within that project will automatically inherit these instructions, leading to more reliable and compliant outputs without needing to repeat the instructions in every prompt.
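The headless invocation described in this section (a prompt passed with -p, content piped on standard input) can also be wrapped in a small function so that larger scripts call it in a loop. The Python sketch below assumes the `gemini` executable is on the PATH. The `runner` parameter and the 120-second timeout are hypothetical conveniences added so the wrapper can be tested without the CLI installed.

```python
import subprocess


def run_gemini(prompt: str, stdin_text: str = "", runner=subprocess.run) -> str:
    """Call `gemini -p <prompt>` non-interactively and return its stdout.

    `stdin_text` plays the role of the piped file in
    `cat blog_post.txt | gemini -p "..."`.
    """
    result = runner(
        ["gemini", "-p", prompt],
        input=stdin_text,
        capture_output=True,
        text=True,
        check=True,    # raise CalledProcessError on a non-zero exit code
        timeout=120,   # arbitrary upper bound (assumption)
    )
    return result.stdout.strip()
```

Passing the arguments as a list avoids shell quoting problems when the prompt or post content contains quotes or dollar signs.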

3.2. Prompt Engineering for High-Quality SEO Content

The quality of the AI-generated content is directly proportional to the quality of the prompt provided. Effective prompt design, or “prompt engineering,” is the practice of crafting clear, specific, and context-rich instructions to elicit the desired response from the language model.

- Generating Meta Titles and Descriptions: A robust prompt for this task should provide the full context of the source material and specify all necessary constraints. The script would first fetch the post content, then pass it to Gemini CLI with a well-structured prompt.

  Example Script Logic:

  ```bash
  # Assume POST_CONTENT holds the full text of a blog post
  PROMPT="You are an expert SEO copywriter. Based on the following blog post, generate a compelling and SEO-optimized meta description.

  Constraints:
  - The description must be a maximum of 160 characters.
  - It must accurately summarize the main topic of the post.
  - It must end with a clear call-to-action (e.g., 'Learn more,' 'Read now,').

  Blog Post Content:
  ${POST_CONTENT}"

  # Pipe the prompt to Gemini CLI and capture its response
  META_DESCRIPTION=$(echo "$PROMPT" | gemini)
  echo "Generated Description: $META_DESCRIPTION"
  ```

  This structured approach ensures the model understands its role, the context, and the specific output requirements, leading to far better results than a simple “summarize this” request.

- Generating Image Alt Text: A similar principle applies to generating alt text. The prompt should include as much context as possible, such as the image’s filename, its caption (if available), and the surrounding text from the article where the image is placed.

  Example Prompt:

  ```text
  Generate a descriptive and concise alt text for an image. The alt text should be suitable for screen readers and SEO.

  Context:
  - Image Filename: 'golden-retriever-catching-frisbee-park.jpg'
  - Surrounding Text: '...our park is a perfect place for dogs to play. As you can see, this happy golden retriever is having a great time...'

  Generated Alt Text:
  ```

- Content Summarization and Keyword Extraction: Gemini CLI can also be used for analytical tasks. By prompting it to summarize an article into key bullet points or to extract the primary and secondary semantic keywords, it can provide valuable data for refining a content strategy or for use in social media promotion.
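A prompt can state a 160-character limit, but nothing guarantees the model will respect it, so generated text is worth checking before it is written back to WordPress. The Python sketch below shows one possible validation step. The function name, the quote-stripping rule, and the choice to reject rather than truncate are all assumptions.

```python
def clean_meta_description(raw: str, max_len: int = 160):
    """Return a cleaned description, or None if it breaks the constraints."""
    # Collapse newlines and repeated spaces into single spaces.
    text = " ".join(raw.split())
    # Drop straight or curly quotes that models sometimes wrap around output.
    text = text.strip("\"'\u201c\u201d")
    if not text or len(text) > max_len:
        return None
    return text


candidate = clean_meta_description('  "Learn how to\n train your dog. Read now."  ')
```

Rejecting an over-long result and retrying the prompt tends to be safer than truncating, since a cut-off sentence can lose its call-to-action.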

3.3. Advanced Use Case: Generating Structured Data (Schema Markup)

One of the more powerful applications of Gemini CLI in an SEO context is the automated generation of structured data in JSON-LD format. Schema markup helps search engines understand the content and context of a page in great detail, which can lead to the awarding of rich snippets in search results.

Manually creating JSON-LD can be tedious and error-prone. Gemini can be prompted to parse unstructured text and convert it into valid schema markup.

Example Prompt for Recipe Schema:

```text
Analyze the following recipe text and generate a valid JSON-LD 'Recipe' schema. Extract all relevant fields, including name, description, ingredients, and recipe instructions. The output must be only the JSON-LD code block.

Recipe Text:
'Grandma's Famous Lasagna. This classic lasagna is made with layers of rich meat sauce, creamy ricotta cheese, and perfectly cooked pasta. To make it, you'll need 1 lb ground beef, 1 jar of marinara sauce, 15 oz ricotta cheese... First, brown the beef in a large skillet. Then, layer the sauce, noodles, and cheese mixture in a baking dish...'
```

A script could capture the resulting JSON output, validate it, and then use the WordPress REST API to inject it into a custom field on the post, which an SEO plugin like AIOSEO could then render in the page’s <head>. This workflow automates a highly technical and high-impact SEO task.
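The "capture and validate" step might look like the Python sketch below. It strips an optional Markdown code fence from the model output, parses the JSON, and checks a few properties. The list of required fields is an illustrative minimum chosen for this example, not an official requirement. The property names `recipeIngredient` and `recipeInstructions` come from the schema.org Recipe type.

```python
import json
import re

FENCE = "`" * 3  # a Markdown code fence, built this way to keep the listing readable
REQUIRED_RECIPE_FIELDS = ("name", "recipeIngredient", "recipeInstructions")


def extract_recipe_schema(model_output: str) -> dict:
    """Parse model output into a Recipe JSON-LD dict, or raise ValueError."""
    # Models often wrap JSON in a fenced block; unwrap it if present.
    match = re.search(FENCE + r"[\w-]*\s*(.*?)" + FENCE, model_output, re.DOTALL)
    candidate = match.group(1) if match else model_output
    try:
        data = json.loads(candidate)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or data.get("@type") != "Recipe":
        raise ValueError("expected a JSON-LD object with @type 'Recipe'")
    missing = [field for field in REQUIRED_RECIPE_FIELDS if field not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return data
```

A structural check like this only catches malformed output. It does not confirm that the extracted values match the source recipe, so a spot check or a run through a schema testing tool is still advisable.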

IV. The AI Automation Scripter: Employing GitHub Copilot CLI as a Development Agent

While Gemini CLI serves as the content intelligence engine, GitHub Copilot CLI functions as the “agentic” developer, responsible for building the operational scripts that connect all the pieces of the architecture. Copilot CLI is not merely a code completion tool; it is a conversational AI agent that resides in the terminal. It can understand natural language instructions to plan and execute complex development tasks, including creating and modifying files, and running shell commands. This capability makes it an extraordinarily powerful accelerator for building the automation workflows at the core of this report.

4.1. Introduction to Copilot CLI as an “Agentic” Tool

The fundamental concept behind GitHub Copilot CLI is that of an AI agent that can work on a user’s behalf within their local development environment. It combines the power of a large language model with the ability to interact directly with the file system and shell, all initiated through a chat-like interface in the terminal.

- Installation and Setup: Similar to Gemini CLI, Copilot CLI is a Node.js application and can be installed globally via npm. An active GitHub Copilot subscription is required.

  ```bash
  npm install -g @github/copilot
  ```

  Upon first run (copilot), the tool will guide the user through a GitHub authentication process. It also implements a “trusted directory” model, asking for permission before it attempts to read, modify, or execute any files within a given project folder, which is a critical security feature.

- Core Functionality: A user can enter a prompt in natural language, and Copilot CLI will reason about the task, formulate a plan (which may involve writing code, modifying files, or running commands), and present that plan for approval. This iterative, conversational workflow allows for the rapid development of complex scripts.

4.2. Generating API Interaction Scripts with Natural Language

The primary role of Copilot CLI in this architecture is to write the bash scripts that will orchestrate the entire SEO automation process. This includes fetching data from WordPress, passing it to Gemini CLI, and sending the results back to WordPress via curl-based API calls. This can be achieved with surprisingly simple natural language prompts.

Walkthrough 1: Generating a Basic GET Request Script

To begin, a developer can ask Copilot CLI to create a foundational script for fetching data from the WordPress REST API, incorporating best practices like using environment variables for credentials.

Prompt:

Write a bash script named ‘get_post.sh’ that uses curl to make a GET request to the WordPress REST API. The script should accept a post ID as its first argument. The target URL should be ‘https://my-wordpress-site.com/wp-json/wp/v2/posts/’. The request must use Basic Authentication. The username should be ‘api_user’ and the application password should be read from an environment variable named ‘WP_APP_PASSWORD’.

Copilot CLI will analyze this request, understand the components (curl, arguments, URL structure, Basic Auth, environment variables), and generate a complete, functional bash script, presenting it for approval before writing it to the get_post.sh file.

Walkthrough 2: Generating a POST Request Script for Updates

Building on the first example, a more complex prompt can be used to generate the script for updating SEO data. This prompt needs to be highly specific about the API endpoint, the HTTP method, and the exact structure of the JSON payload, especially when targeting a specific plugin’s data structure.

Prompt (for AIOSEO):

Create a bash script named ‘update_seo.sh’. This script should update a WordPress post’s AIOSEO meta description. It must accept two arguments: the post ID and the new meta description string. The script should use curl to send a POST request to ‘https://my-wordpress-site.com/wp-json/wp/v2/posts/<id>’. The JSON payload must be structured with a top-level ‘aioseo_meta_data’ object, which contains a ‘description’ key with the new meta description as its value. Handle authentication using the ‘WP_APP_PASSWORD’ environment variable as before.

This prompt’s specificity is informed by the AIOSEO REST API documentation, which states that updates are made via the aioseo_meta_data property in the request body. Copilot CLI can parse this requirement and generate the corresponding curl command with a correctly formatted JSON payload.
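Whatever script Copilot produces, the JSON body is the part most easily broken by quoting, since a description containing double quotes will corrupt a hand-assembled string. As a point of comparison, the Python sketch below builds the payload described in the prompt with a JSON encoder. The helper name is hypothetical, and the payload shape simply follows the structure stated above.

```python
import json


def aioseo_description_payload(description: str) -> str:
    """Serialize the request body for updating an AIOSEO meta description."""
    return json.dumps({"aioseo_meta_data": {"description": description}})


# A description with embedded double quotes survives encoding intact.
body = aioseo_description_payload('Save 20% on "classic" dog toys. Shop now.')
```

A generated bash script can get the same safety by delegating JSON construction to a tool such as jq instead of interpolating variables into a string.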

Iterative Refinement:

The power of Copilot CLI lies in its conversational nature. After it generates the initial script, the developer can provide follow-up instructions to improve it.

Follow-up Prompt:

That’s a good start. Now, modify the ‘update_seo.sh’ script to include error handling. It should check the HTTP status code of the curl response and print a success message if the code is 200, or an error message if it’s anything else.

Copilot CLI will then modify the existing script to incorporate this new logic, demonstrating its ability to work iteratively on a codebase.
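After both prompts, the resulting script might look roughly like the sketch below. It is an illustration under assumptions, not Copilot's literal output: the function names are hypothetical, jq is assumed to be installed for building the JSON body, and the payload shape follows the aioseo_meta_data structure described in the prompt.

```shell
#!/usr/bin/env bash
# update_seo.sh: update a post's AIOSEO meta description (illustrative sketch).

BASE_URL="https://my-wordpress-site.com/wp-json/wp/v2/posts"
WP_USER="api_user"

# Build the JSON body with jq so quotes and newlines in the description are escaped.
build_payload() {
  jq -n -c --arg desc "$1" '{aioseo_meta_data: {description: $desc}}'
}

# Succeeds only for HTTP 200, the status the follow-up prompt asks the script to check.
is_success_status() {
  [ "$1" = "200" ]
}

update_description() {
  local post_id="$1" description="$2" status
  if [ -z "${WP_APP_PASSWORD:-}" ]; then
    echo "Error: WP_APP_PASSWORD is not set" >&2
    return 1
  fi
  # --output /dev/null discards the body; --write-out prints only the status code.
  status="$(curl --silent --output /dev/null --write-out '%{http_code}' \
    --request POST \
    --user "${WP_USER}:${WP_APP_PASSWORD}" \
    --header 'Content-Type: application/json' \
    --data "$(build_payload "$description")" \
    "${BASE_URL}/${post_id}")"

  if is_success_status "$status"; then
    echo "Success: post ${post_id} updated (HTTP ${status})"
  else
    echo "Error: update of post ${post_id} failed (HTTP ${status})" >&2
    return 1
  fi
}
```

A call such as update_description 42 "A concise summary of the post." would send the request. Building the body with jq rather than string concatenation avoids malformed JSON when a description contains quotation marks.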

4.3. Tool Approval and Security Considerations

A key feature of GitHub Copilot CLI is its built-in security model. When Copilot wants to run a command that can modify the system or execute code (such as curl, sed, or node), it pauses and explicitly asks for user approval before running it. This “human-in-the-loop” approach prevents the AI agent from performing unintended or malicious actions.

For fully automated, non-interactive scripts (e.g., those run by a cron job), this interactive approval is not feasible. In these cases, Copilot CLI provides command-line flags to pre-approve certain tools:

- --allow-tool 'shell(curl)': This allows Copilot to use the curl command without prompting for approval during that session.

- --allow-all-tools: A broader but more dangerous flag that grants approval for all tools for the duration of the session.

These flags must be used with extreme caution. The security implications are significant; for example, globally approving the rm command could allow a malfunctioning or maliciously prompted script to delete files without restriction. The best practice for unattended scripts is to grant permissions as narrowly as possible, allowing only the specific commands required for the task at hand.

The synergy between Gemini CLI and GitHub Copilot CLI is the linchpin of this entire architecture. Gemini acts as the specialized “SEO content strategist,” generating the high-quality text and structured data needed for optimization. Copilot acts as the “developer,” taking instructions in plain English and building the robust, secure, and functional scripts required to deliver that content to the WordPress API. This deliberate division of labor, leveraging each tool for its core strength, creates a workflow that is both highly efficient and exceptionally powerful.

V. Synthesis and Execution: Integrated AI-Powered SEO Workflows

This section synthesizes the preceding architectural components—the programmable WordPress API, the Gemini content engine, and the Copilot scripting agent—into complete, end-to-end automation playbooks. These workflows represent practical, executable solutions for high-impact SEO tasks, demonstrating how the technologies integrate to form a cohesive system. Each workflow includes the objective, a detailed breakdown of the script logic, and the natural language prompts used to orchestrate the AI tools.

5.1. Workflow 1: Bulk On-Page Content Refresh

- Objective: To programmatically identify and update all published posts on a WordPress site that have a missing or sub-optimal (e.g., too short) meta description. This is a common and high-impact task, especially for large sites with legacy content.

- Script Logic:

- Fetch Posts: The script begins by making a series of paginated GET requests to the /wp/v2/posts?status=publish endpoint to retrieve a list of all published posts. It will need to extract the id, content.rendered, and aioseo_meta_data.description for each post.

- Loop and Evaluate: It then iterates through the list of posts. For each post, it checks if the aioseo_meta_data.description field is null, empty, or shorter than a predefined minimum length (e.g., 70 characters).

- Generate New Description (with Gemini): If a post fails the evaluation, the script extracts its content.rendered field, sanitizes it to plain text, and pipes it to Gemini CLI. The prompt will instruct Gemini to generate a new, optimized meta description based on the content.

- Construct and Execute Update (with curl): The script captures the text output from Gemini CLI. It then constructs a JSON payload containing the new description within the aioseo_meta_data object. Finally, it executes a POST request using curl to the /wp/v2/posts/<id> endpoint for that specific post, updating its meta description.

- Log Results: The script logs the ID of each post it updates and the new description that was applied, creating an audit trail.

- GitHub Copilot CLI Prompt to Build the Script:

Build a complete bash script named ‘bulk_meta_refresh.sh’. The script should:

1. Fetch all published posts from a WordPress site at ‘https://my-site.com’ using the REST API and pagination. Use ‘jq’ to parse the JSON and extract the ID, content, and AIOSEO meta description for each post.

2. Loop through each post. If the meta description is missing or shorter than 70 characters, proceed to the next step.

3. For those posts, take the post content, pipe it to ‘gemini -p “Generate a meta description under 160 characters for the following text:”’, and capture the output.

4. Use curl to send a POST request to update the post with the new meta description. The JSON body must use the ‘aioseo_meta_data’ object with a ‘description’ key.

5. Use the ‘WP_APP_PASSWORD’ environment variable for authentication.

6. Echo the ID of each post that is updated.
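The six steps above can be sketched as follows. This is a minimal illustration under several assumptions: jq and the gemini command are installed, the api_user account from the earlier walkthroughs is reused, and the function names are hypothetical. The HTML stripping is deliberately crude and would need hardening for real content.

```shell
#!/usr/bin/env bash
# bulk_meta_refresh.sh: illustrative sketch of the bulk refresh workflow.

SITE="https://my-site.com"
WP_USER="api_user"   # assumption: same user as in the earlier walkthroughs
MIN_LENGTH=70

# True when a description is missing, the string "null", or shorter than MIN_LENGTH.
needs_refresh() {
  [ -z "$1" ] || [ "$1" = "null" ] || [ "${#1}" -lt "$MIN_LENGTH" ]
}

# Crude HTML-to-text conversion: drop tags, then collapse runs of whitespace.
strip_html() {
  sed -e 's/<[^>]*>//g' | tr -s '[:space:]' ' '
}

# Fetch one page of published posts (100 is the REST API maximum for per_page).
fetch_page() {
  curl --silent --fail --user "${WP_USER}:${WP_APP_PASSWORD}" \
    "${SITE}/wp-json/wp/v2/posts?status=publish&per_page=100&page=$1"
}

refresh_all() {
  local page=1 posts
  # The loop ends when curl fails (page past the end) or the page is empty.
  while posts="$(fetch_page "$page")" && [ "$posts" != "[]" ]; do
    printf '%s' "$posts" | jq -c '.[]' | while IFS= read -r post; do
      id="$(printf '%s' "$post" | jq -r '.id')"
      desc="$(printf '%s' "$post" | jq -r '.aioseo_meta_data.description // empty')"
      needs_refresh "$desc" || continue
      new_desc="$(printf '%s' "$post" | jq -r '.content.rendered' | strip_html |
        gemini -p "Generate a meta description under 160 characters for the following text:")"
      payload="$(jq -n -c --arg d "$new_desc" '{aioseo_meta_data: {description: $d}}')"
      if curl --silent --fail --output /dev/null --request POST \
        --user "${WP_USER}:${WP_APP_PASSWORD}" \
        --header 'Content-Type: application/json' \
        --data "$payload" "${SITE}/wp-json/wp/v2/posts/${id}"; then
        echo "Updated post ${id}: ${new_desc}"
      fi
      sleep 1   # simple rate limiting between updates
    done
    page=$((page + 1))
  done
}
```

Running refresh_all with WP_APP_PASSWORD exported would process the whole site. For a first run, it may be safer to replace the POST request with an echo of the payload so the generated descriptions can be inspected before anything is written.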

5.2. Workflow 2: Automated Image SEO Audit and Repair

- Objective: To scan the entire WordPress media library, identify all images that are missing alternative (alt) text, and use AI to generate and apply descriptive alt text to improve accessibility and image search rankings.

- Script Logic:

- Fetch Media Items: The script makes paginated GET requests to the /wp/v2/media?media_type=image endpoint to retrieve all image objects from the media library.

- Loop and Evaluate: It iterates through each image object and checks if the alt_text field is empty.

- Generate Alt Text (with Gemini): If the alt_text is missing, the script gathers available context from the image object, such as the title.rendered, caption.rendered, and the filename from the source_url. It uses this context to form a prompt for Gemini CLI.

- Construct and Execute Update: The script captures the generated alt text from Gemini and uses curl to send a POST request to the /wp/v2/media/<id> endpoint, updating the alt_text field for that image.

- Log and Delay: The script logs the ID of the updated image and includes a short sleep delay in the loop to avoid overwhelming the server with too many rapid API requests.

GitHub Copilot CLI Prompt to Build the Script:

Create a bash script ‘image_seo_repair.sh’. It should get all images from the WordPress media API at ‘https://my-site.com’. For each image where the alt_text is empty, it should use the image title and caption as context to prompt Gemini CLI with ‘Generate a descriptive alt text based on this context: [title and caption]’. Then, it must use curl to POST the generated alt text back to the media endpoint for that image ID. Use environment variables for authentication.
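A script for this workflow might take roughly the following shape. It is a sketch under assumptions: jq and the gemini command are available, the api_user name is carried over from the earlier walkthroughs, and the helper names are hypothetical. The prompt wording follows the one given above, extended with the caption and file name mentioned in the script logic.

```shell
#!/usr/bin/env bash
# image_seo_repair.sh: illustrative sketch of the image alt-text workflow.

SITE="https://my-site.com"
WP_USER="api_user"   # assumption: the prompt only asks for environment variables

# Extract the file name from a source_url, ignoring any query string.
filename_from_url() {
  basename "${1%%\?*}"
}

# Assemble the Gemini prompt from the context available on the media object.
build_alt_prompt() {
  printf 'Generate a descriptive alt text based on this context: title "%s", caption "%s", file name "%s"\n' \
    "$1" "$2" "$3"
}

fetch_media_page() {
  curl --silent --fail --user "${WP_USER}:${WP_APP_PASSWORD}" \
    "${SITE}/wp-json/wp/v2/media?media_type=image&per_page=100&page=$1"
}

repair_all() {
  local page=1 items
  while items="$(fetch_media_page "$page")" && [ "$items" != "[]" ]; do
    # Keep only images whose alt_text is empty.
    printf '%s' "$items" | jq -c '.[] | select(.alt_text == "")' |
      while IFS= read -r item; do
        id="$(printf '%s' "$item" | jq -r '.id')"
        title="$(printf '%s' "$item" | jq -r '.title.rendered')"
        caption="$(printf '%s' "$item" | jq -r '.caption.rendered' | sed 's/<[^>]*>//g')"
        file="$(filename_from_url "$(printf '%s' "$item" | jq -r '.source_url')")"
        # </dev/null stops gemini from consuming the loop's remaining input.
        alt="$(gemini -p "$(build_alt_prompt "$title" "$caption" "$file")" </dev/null)"
        if curl --silent --fail --output /dev/null --request POST \
          --user "${WP_USER}:${WP_APP_PASSWORD}" \
          --header 'Content-Type: application/json' \
          --data "$(jq -n -c --arg a "$alt" '{alt_text: $a}')" \
          "${SITE}/wp-json/wp/v2/media/${id}"; then
          echo "Updated image ${id}: ${alt}"
        fi
        sleep 1   # short delay to avoid overwhelming the server
      done
    page=$((page + 1))
  done
}
```

Because the model sees only text metadata and never the image itself, the quality of the result depends on how descriptive the title, caption, and file name are.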

5.3. Workflow 3: Dynamic Internal Linking Suggestions

- Objective: This is a more advanced workflow designed to assist content creators. When a new post is being written (i.e., it is in “draft” status), this script analyzes its content and suggests relevant internal linking opportunities from the existing library of published articles.

- Script Logic:

- Input: The script takes the ID of a draft post as an argument.

- Fetch Data: It makes two sets of API calls:

- A GET request to /wp/v2/posts/<draft_id> to retrieve the full content of the draft post.

- Paginated GET requests to /wp/v2/posts?status=publish&_fields=id,title,link to get a lightweight list of all published posts, containing only their ID, title, and URL.

- Analyze and Suggest (with Gemini): The script compiles the draft content and the list of published posts into a single, large prompt for Gemini CLI. The prompt is carefully engineered to request a specific output format.

- Process Output: The script captures the JSON output from Gemini and parses it to present a clean, readable list of suggestions to the user (e.g., printing to the console or writing to a file). This output is for review and manual implementation by the content editor, representing a “human-in-the-loop” approach.

Gemini CLI Prompt for Analysis:

You are an expert SEO strategist specializing in internal linking.

Analyze the following ‘Draft Post Content’.

Based on its topics and keywords, identify up to 3 highly relevant internal link opportunities from the ‘List of Published Articles’ provided.

For each suggestion, provide:

1. The exact phrase from the draft post that should be used as the anchor text.

2. The corresponding URL from the list of published articles to link to.

Your output MUST be a valid JSON array of objects, with each object having two keys: ‘anchorText’ and ‘url’.

---

Draft Post Content:

[Full text of the draft post here]

---

List of Published Articles:

[List of published articles with ID, title, and URL here]

---
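One way to wire this prompt into a script is sketched below. The function names are hypothetical, jq and the gemini command are assumed to be installed, and only the first 100 published posts are fetched to keep the example short. Requesting the draft with context=edit is used here so that the raw content is returned to the authenticated user.

```shell
#!/usr/bin/env bash
# suggest_links.sh: illustrative sketch of the internal linking workflow.

SITE="https://my-site.com"
WP_USER="api_user"   # assumption: same user as in the earlier walkthroughs

# Fill the prompt template with the draft text ($1) and the article list ($2).
build_link_prompt() {
  cat <<EOF
You are an expert SEO strategist specializing in internal linking.
Analyze the following 'Draft Post Content'.
Based on its topics and keywords, identify up to 3 highly relevant internal link opportunities from the 'List of Published Articles' provided.
For each suggestion, provide:
1. The exact phrase from the draft post that should be used as the anchor text.
2. The corresponding URL from the list of published articles to link to.
Your output MUST be a valid JSON array of objects, with each object having two keys: 'anchorText' and 'url'.
---
Draft Post Content:
$1
---
List of Published Articles:
$2
EOF
}

# Turn the JSON array read from stdin into one readable line per suggestion.
print_suggestions() {
  jq -r '.[] | "\(.anchorText) -> \(.url)"'
}

suggest_links() {
  local draft articles
  draft="$(curl --silent --fail --user "${WP_USER}:${WP_APP_PASSWORD}" \
    "${SITE}/wp-json/wp/v2/posts/$1?context=edit" | jq -r '.content.raw')"
  articles="$(curl --silent --fail \
    "${SITE}/wp-json/wp/v2/posts?status=publish&per_page=100&_fields=id,title,link")"
  gemini -p "$(build_link_prompt "$draft" "$articles")" | print_suggestions
}
```

Calling suggest_links with a draft ID prints the suggestions for an editor to review. Models sometimes wrap JSON in a Markdown code fence or add commentary, so a production script would likely validate the output before parsing it.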

| Task | Tool | Example Command/Prompt | Result |
|---|---|---|---|
| Generate Meta Description | Gemini CLI | `cat post.txt \| …` | |
| Generate Alt Text | Gemini CLI | `gemini -p "Generate alt text for an image titled 'A sunset over the ocean'"` | Creates descriptive text like “A vibrant orange sunset reflecting over calm ocean waves.” |
| Generate curl Script | Copilot CLI | `copilot -p "Write a bash script using curl to POST a JSON object to a URL."` | Generates a complete shell script with placeholders for URL and JSON data. |
| Update Post via API | curl (in script) | `curl -X POST -H "Authorization:..." -H "Content-Type: application/json" -d '{"key":"value"}' URL` | Sends the update request to the WordPress REST API. |
| Summarize Content | Gemini CLI | `echo "Long article text..." \| …` | |

VI. Advanced Considerations and Future-Proofing

Implementing a programmatic SEO architecture is not a one-time setup; it requires ongoing management, monitoring, and adaptation. Moving these workflows from a development environment to a production setting introduces considerations around deployment, reliability, and quality control. Furthermore, the rapid evolution of AI necessitates a forward-looking perspective to ensure the system remains effective and can incorporate future advancements.

6.1. Deployment and Scheduling

Once the automation scripts are developed and tested, they need to be deployed in a way that allows for regular, unattended execution.

- Cron Jobs: For tasks that need to run on a recurring schedule (e.g., a weekly audit for broken links or a daily check for missing image alt text), the most common and reliable method is a server-side cron job. Cron is the time-based job scheduler in Unix-like operating systems, and a cron job is a task it runs on a schedule. A system administrator can configure a cron job to execute a specific shell script at a precise interval (e.g., every Sunday at 3:00 AM). This “set it and forget it” approach is ideal for routine maintenance and auditing tasks.

- CI/CD Pipelines: For more advanced and event-driven automation, the scripts can be integrated into a Continuous Integration/Continuous Deployment (CI/CD) pipeline, such as GitHub Actions. For example, a workflow could be configured to trigger automatically whenever a pull request adds a new post to a Git repository that manages the site’s content. This workflow could run a script that generates a meta description and suggests internal links for the new post, adding the results as a comment on the pull request. This integrates SEO checks directly into the development and content creation lifecycle.
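For the cron case, a small wrapper script can keep scheduled runs from overlapping. The sketch below is one possible approach, not a prescribed one: the script name, paths, and function names are placeholders, and the lock is a plain directory created with mkdir, which is atomic on local file systems.

```shell
#!/usr/bin/env bash
# run_weekly_audit.sh: hypothetical wrapper intended to be called from cron.
#
# Example crontab entry (every Sunday at 3:00 AM); the paths are placeholders:
#   0 3 * * 0 /opt/seo/run_weekly_audit.sh >> /var/log/seo_audit.log 2>&1

LOCK_DIR="${LOCK_DIR:-/tmp/seo_audit.lock}"

timestamp() {
  date '+%Y-%m-%dT%H:%M:%S'
}

# mkdir fails if the directory already exists, so it doubles as a simple lock.
acquire_lock() {
  mkdir "$LOCK_DIR" 2>/dev/null
}

release_lock() {
  rmdir "$LOCK_DIR" 2>/dev/null
}

# Run the given command under the lock, printing start and end markers.
run_locked() {
  local status
  if ! acquire_lock; then
    echo "$(timestamp) previous run still active, skipping"
    return 0
  fi
  echo "$(timestamp) starting: $*"
  "$@"
  status=$?
  release_lock
  echo "$(timestamp) finished with exit code ${status}"
  return "$status"
}
```

The wrapper would end with a line such as run_locked /opt/seo/image_seo_repair.sh. If the process is killed mid-run the lock directory is left behind, so a production version might add a trap to clean it up.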

6.2. Error Handling, Logging, and Rate Limiting

Production-grade scripts must be robust and resilient. A script that fails silently or overwhelms the server is worse than no script at all.

- Robust Error Handling: Every API call within a script should be wrapped in error-handling logic. This means checking the HTTP status code of each response, because curl itself exits successfully on an HTTP error unless the --fail option is used. A 200 OK or 201 Created indicates success, while a 4xx or 5xx code indicates a problem (e.g., 401 Unauthorized for bad credentials, 403 Forbidden due to insufficient permissions, 500 Internal Server Error). The script should be able to identify these errors and take appropriate action, such as retrying the request when the failure is likely to be temporary, or logging the failure and moving on to the next item.

- Comprehensive Logging: It is essential to maintain a detailed log of all actions performed by the automation scripts. For a bulk update script, the log should record the timestamp, the ID of the post that was modified, the old value, the new value, and the status of the API call (success or failure). This audit trail is invaluable for debugging issues, tracking changes, and reverting unintended modifications.

- Rate Limiting: Sending hundreds or thousands of API requests to a WordPress site in a rapid burst can overwhelm the server’s resources, leading to slow performance or even crashes. It can also trigger security systems that may block the script’s IP address. To prevent this, bulk operation scripts must incorporate rate limiting. This can be as simple as adding a sleep 1 command inside the main loop, which pauses the script for one second between each API call, spreading the load over time and ensuring the server remains responsive.
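The three practices above can be combined in a few small helper functions, sketched here under stated assumptions. The function names, the log format, and the choice to retry only on 429 and 5xx responses are illustrative decisions rather than requirements of the WordPress REST API.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for status checking, retrying, and audit logging.

MAX_ATTEMPTS="${MAX_ATTEMPTS:-3}"
RETRY_DELAY="${RETRY_DELAY:-1}"        # seconds to wait between attempts
LOG_FILE="${LOG_FILE:-seo_automation.log}"

# Classify an HTTP status code as success, retry (likely transient), or fatal.
classify_status() {
  case "$1" in
    200|201)         echo success ;;
    429|5[0-9][0-9]) echo retry ;;
    *)               echo fatal ;;
  esac
}

# Append one tab-separated audit record: timestamp, ID, old value, new value, result.
log_action() {
  printf '%s\t%s\t%s\t%s\t%s\n' \
    "$(date -u '+%Y-%m-%dT%H:%M:%SZ')" "$1" "$2" "$3" "$4" >> "$LOG_FILE"
}

# Run a command that prints an HTTP status code, retrying transient failures.
# Prints the last status code seen and returns 0 only on success.
with_retry() {
  local attempt=1 status=""
  while [ "$attempt" -le "$MAX_ATTEMPTS" ]; do
    status="$("$@")"
    case "$(classify_status "$status")" in
      success) echo "$status"; return 0 ;;
      fatal)   echo "$status"; return 1 ;;
    esac
    attempt=$((attempt + 1))
    sleep "$RETRY_DELAY"
  done
  echo "$status"
  return 1
}
```

In a bulk script, a request could be wrapped as status="$(with_retry curl --silent --output /dev/null --write-out '%{http_code}' ...)" and followed by a log_action call and a sleep 1 before the next item. Retrying a 403 would be pointless because the permissions will not change between attempts, which is why client errors other than 429 are treated as fatal here.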

6.3. Quality Control and the Human-in-the-Loop

While AI models like Gemini are incredibly powerful, they are not infallible. They can occasionally generate content that is factually incorrect, tonally inappropriate for the brand, or simply generic and uninspired. Blindly automating the publication of AI-generated content without any oversight is a significant risk.

The most effective and responsible approach is to implement a “human-in-the-loop” system. This model positions the AI not as a final author, but as a powerful assistant that accelerates the human workflow.

- For Low-Risk Tasks: Automation can be fully deployed for tasks like fixing missing alt text on a large backlog of images, where having a decent, AI-generated description is significantly better than having nothing at all.

- For High-Risk Tasks: For critical on-page elements like the SEO title and meta description of a high-traffic landing page, the workflow should be modified. The script can generate a set of suggestions which are then saved to a draft, a spreadsheet, or a project management tool for a human editor to review, refine, and approve before they are pushed live. This hybrid approach combines the scale and speed of AI with the nuance and quality judgment of a human expert.
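The split between low-risk and high-risk tasks can be expressed as a simple routing step. The sketch below assumes a tab-separated review file that an editor opens in a spreadsheet; the file name, column names, function names, and the rule that only alt text is applied automatically are all illustrative choices.

```shell
#!/usr/bin/env bash
# Hypothetical routing helpers for a human-in-the-loop workflow.

REVIEW_FILE="${REVIEW_FILE:-seo_review_queue.tsv}"

# Decide how a change is handled: alt text is applied automatically,
# every other field waits for an editor. This rule is an assumption.
route_change() {
  case "$1" in
    alt_text) echo auto ;;
    *)        echo review ;;
  esac
}

# Append a suggestion to the review queue, writing a header row on first use.
queue_for_review() {
  local id="$1" field="$2" suggestion="$3"
  if [ ! -s "$REVIEW_FILE" ]; then
    printf 'post_id\tfield\tsuggestion\tapproved\n' > "$REVIEW_FILE"
  fi
  # Tabs and newlines inside the suggestion would break the row, so flatten them.
  suggestion="$(printf '%s' "$suggestion" | tr '\t\n' '  ')"
  printf '%s\t%s\t%s\t\n' "$id" "$field" "$suggestion" >> "$REVIEW_FILE"
}
```

A bulk script could call route_change for each generated value and either send the update immediately or call queue_for_review. A second script would later read the rows an editor has marked as approved and push only those to the API.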

6.4. The Future of Programmatic SEO: Evolving Agent Capabilities

The field of AI is advancing rapidly, and the agentic capabilities demonstrated by tools like GitHub Copilot CLI are still in their early stages. The future of this architecture lies in the increasing sophistication and autonomy of these AI agents.

- Extensibility with MCP: Both Gemini CLI and GitHub Copilot CLI support the Model Context Protocol (MCP), which allows them to be extended with custom tools and data sources via MCP servers. This means developers can build their own proprietary tools (e.g., an integration with an internal analytics platform) and make them available to the AI agent, further customizing and enhancing the automation capabilities.

- Multi-Agent Workflows: The product roadmaps for these tools include concepts like subagents and multi-agent workflows. In the future, it may be possible to deploy a team of specialized AI agents: one agent to monitor Google Search Console for ranking drops, which then tasks another agent to analyze the underperforming page, which in turn tasks a third agent to generate and implement on-page improvements, all with minimal human intervention.

By building a foundational architecture on open and extensible platforms like the WordPress REST API and command-line AI agents, developers and SEO professionals can create a system that is not only powerful today but is also well-positioned to incorporate the next generation of AI-driven optimization technologies as they become available.